Optimism - A Holistic Research Report

A complete research report on Optimism made by redacted minds

Executive Summary:

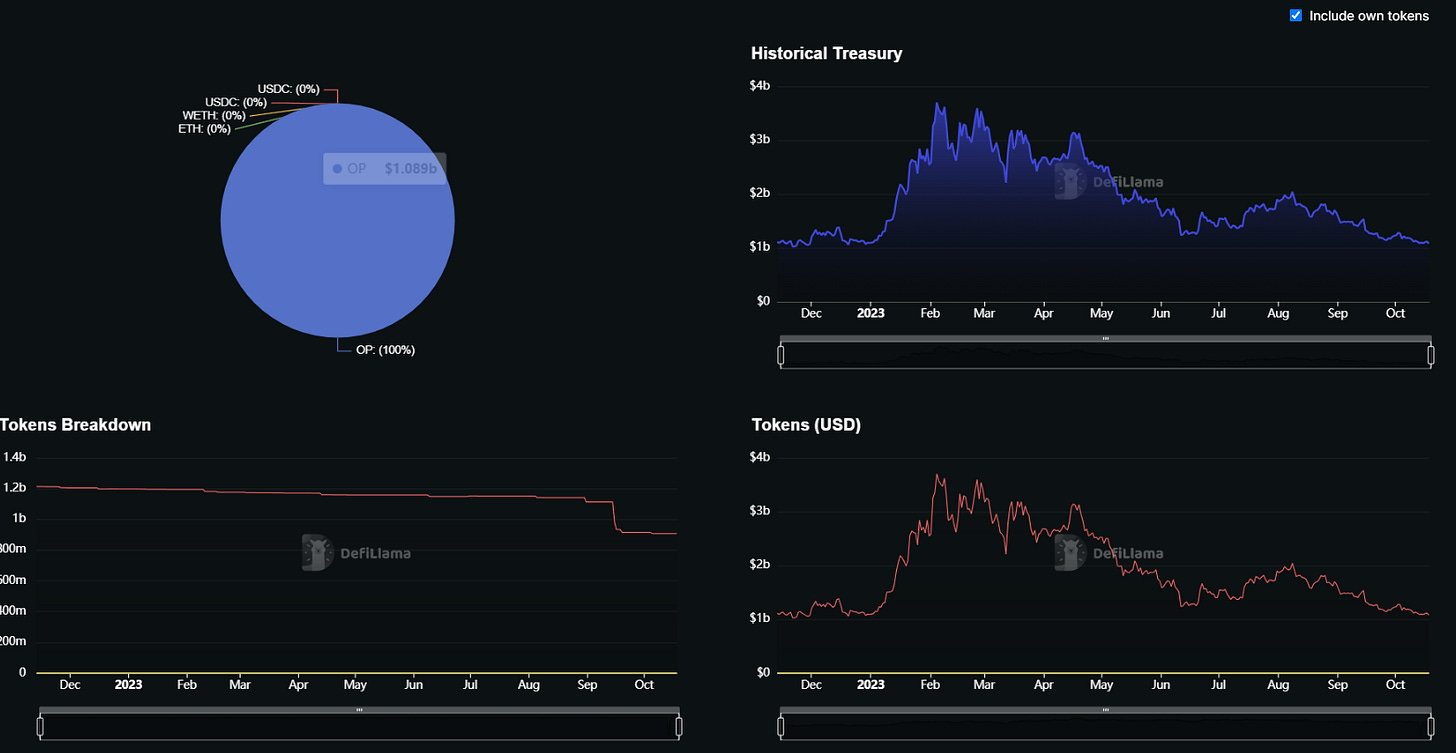

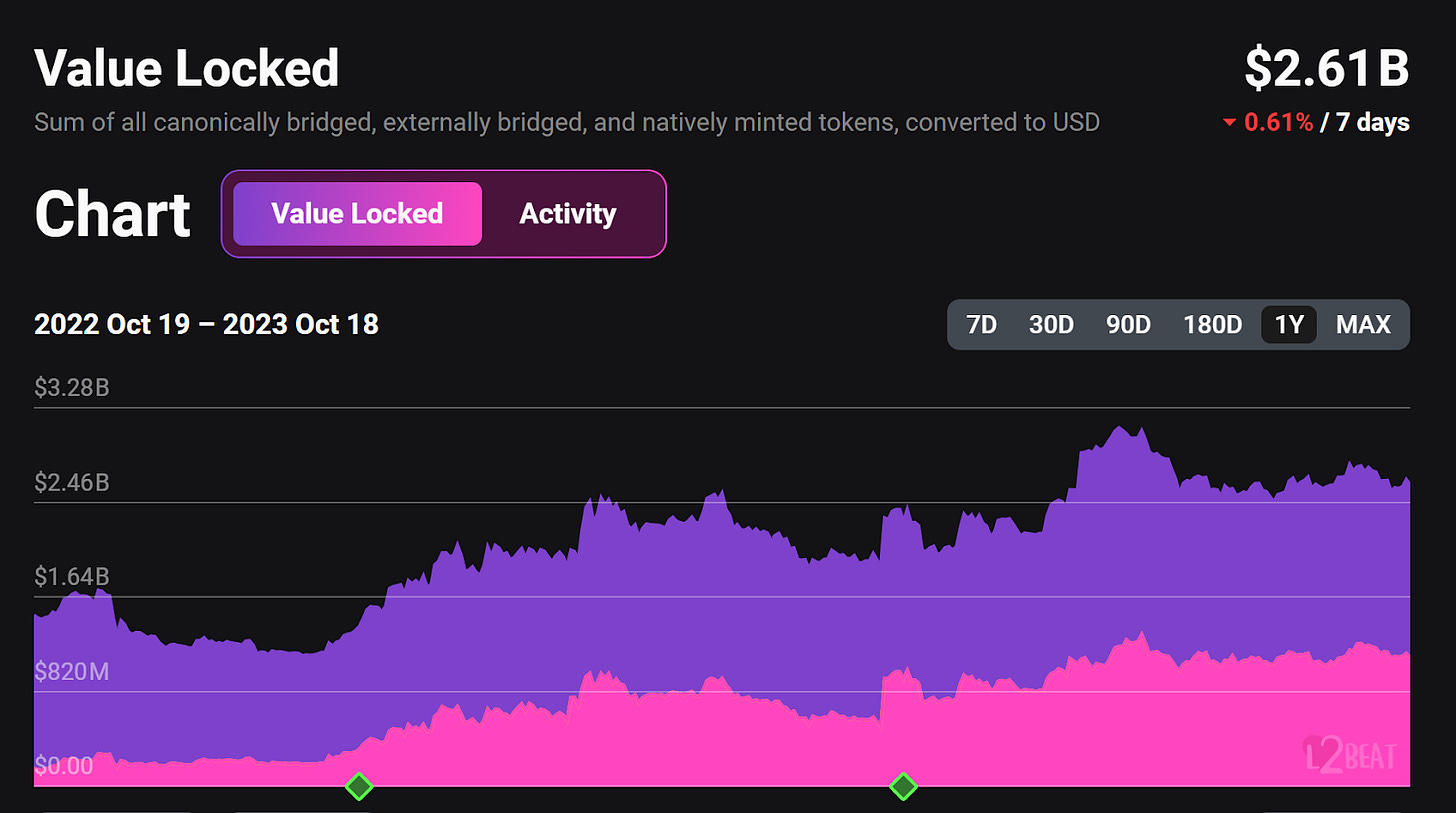

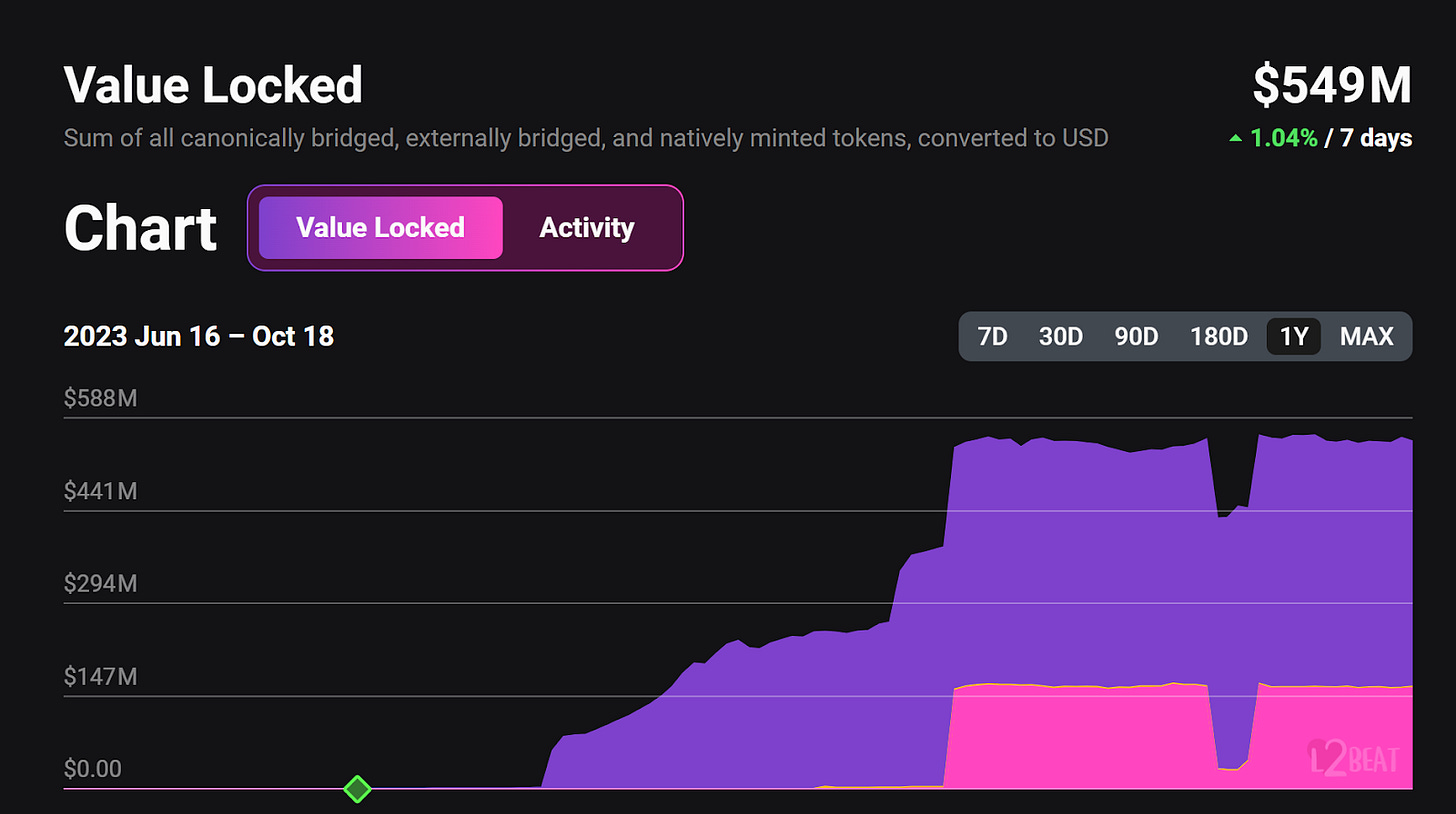

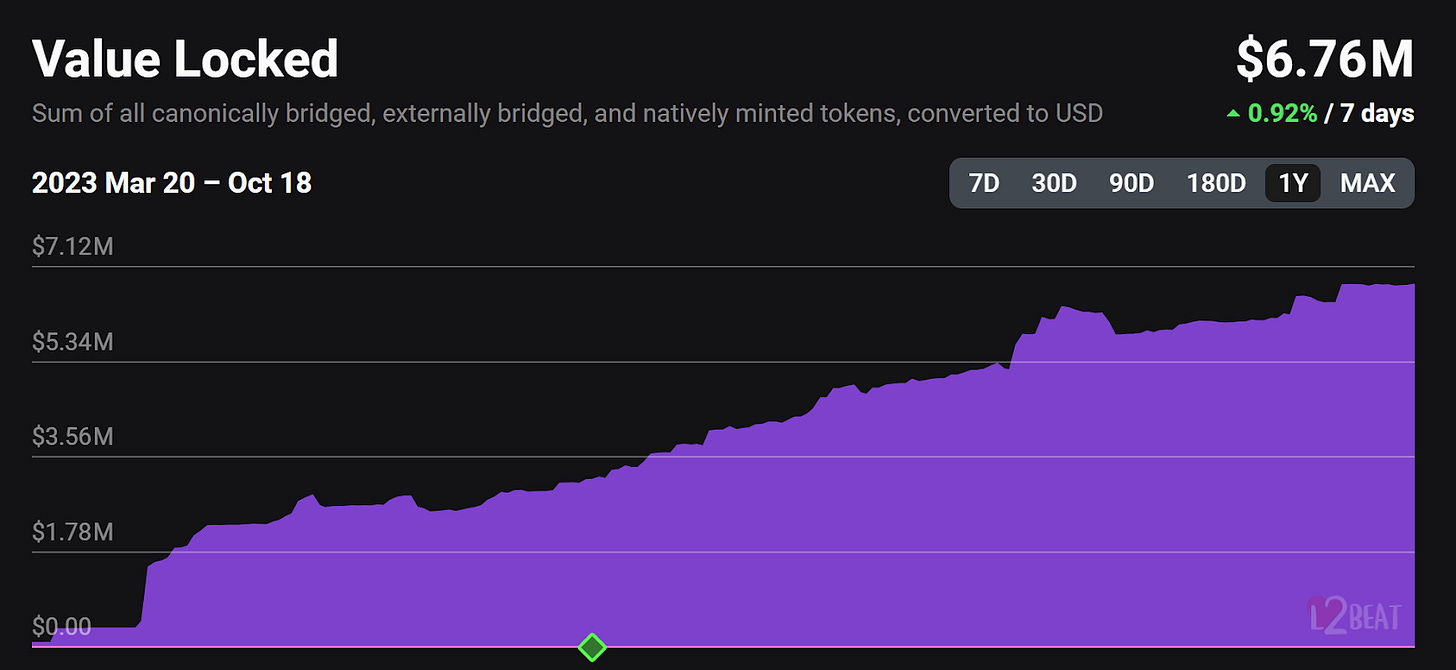

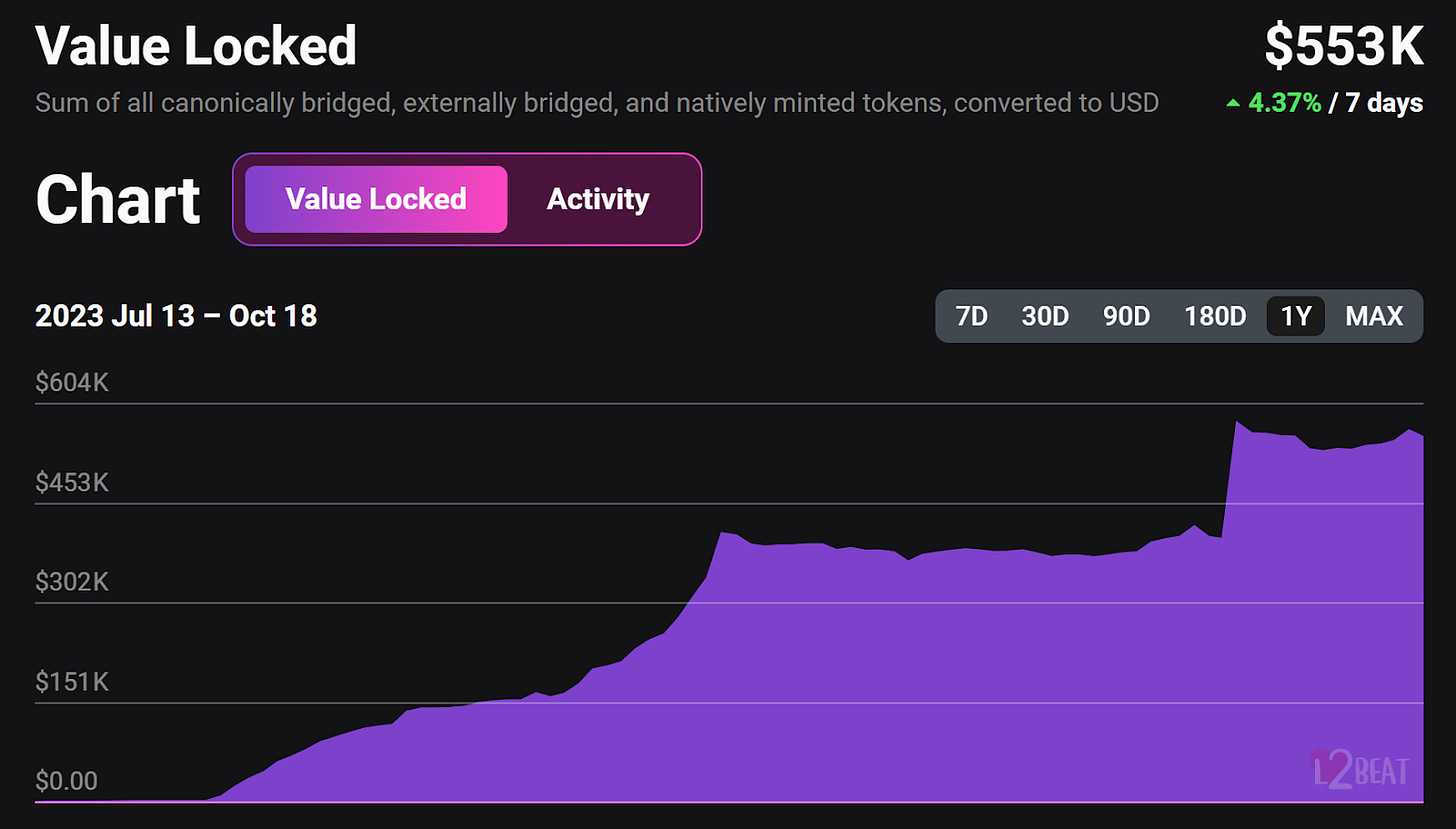

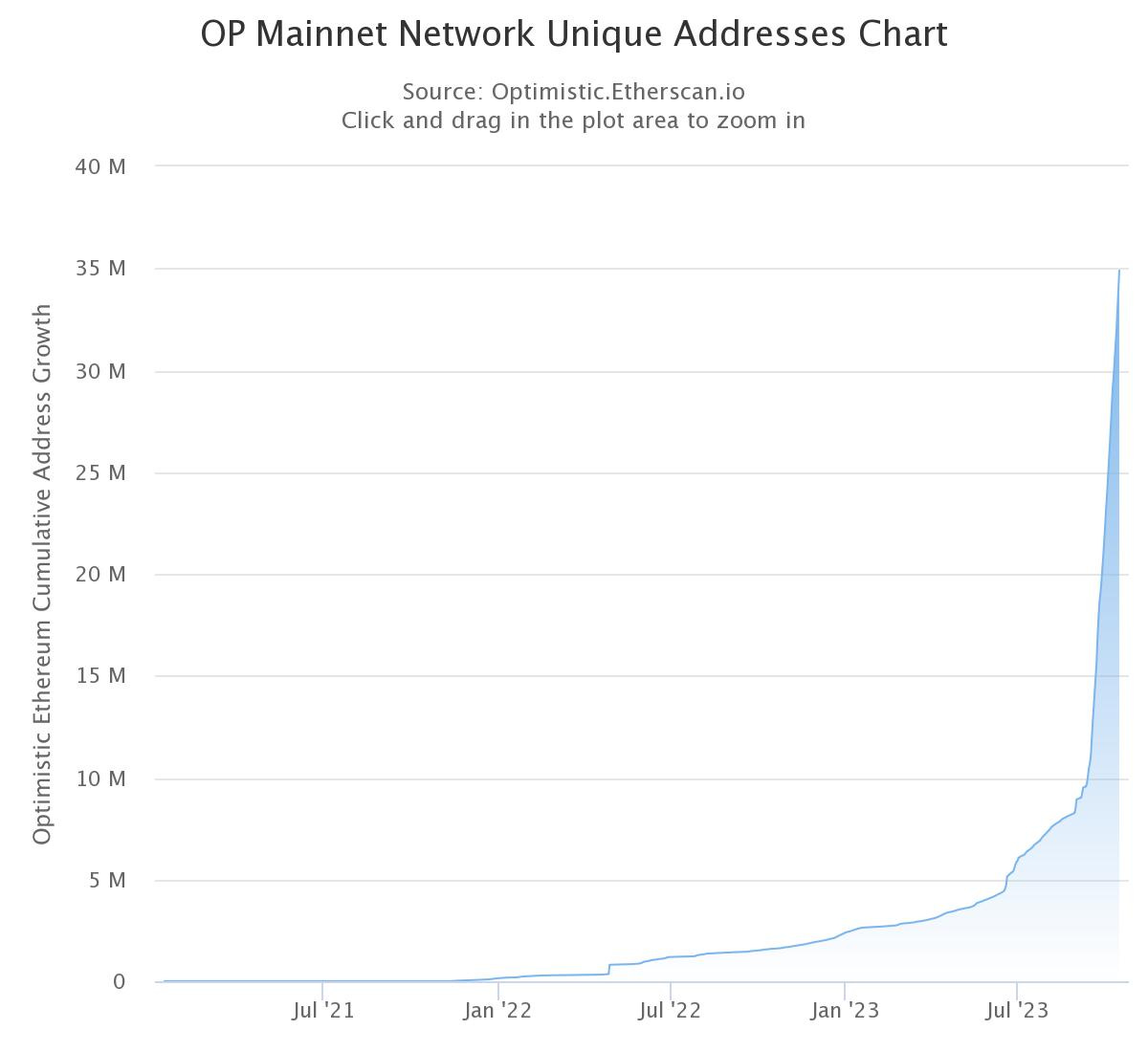

Optimism is an innovative scaling solution designed to address Ethereum's scalability challenges through the creation of an optimistic rollup. The chain has been in development since 2018, launched its token in 2022 and has attracted more than $595M in TVL to its ecosystem.

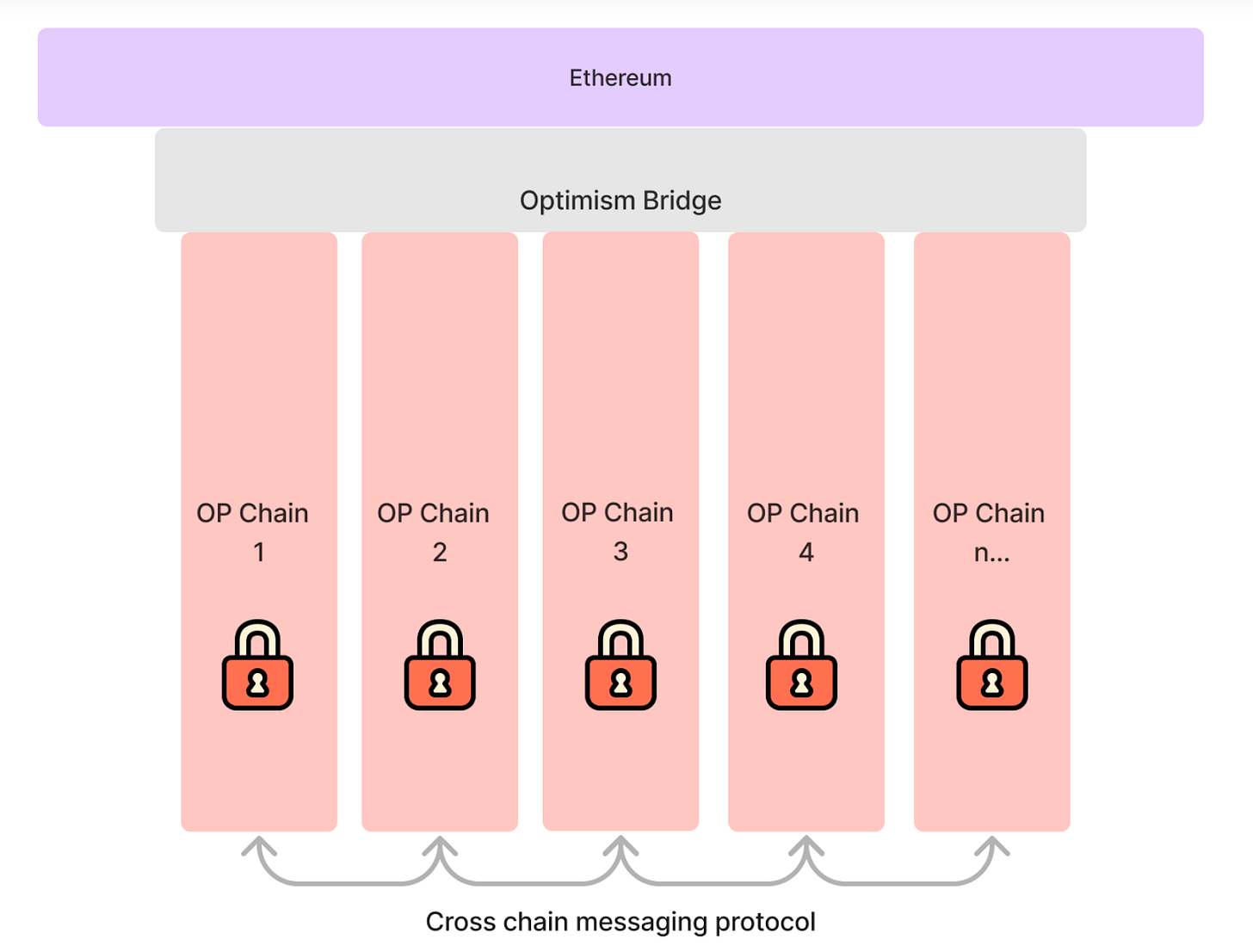

In the form of the OP Stack, Optimism provides a highly versatile and customizable framework to abstract complexity for builders that want to spin up (application-specific) rollups. In the context of the superchain vision, these rollups will all be combined into an interoperable ecosystem of chains that will provide the end user with a UX reminiscent of a monolithic, logical chain. This seamless interoperability will be enabled by shared sequencing and cross-rollup message passing protocols.

Thanks to the high degree of EVM equivalence, Optimism enables the reuse of code, tooling and infrastructure from Ethereum L1, thereby offering developers and users an experience akin to Ethereum's mainnet and enabling seamless migration of Solidity contracts, all while providing high throughput and low cost. Moreover, the versatility & customizability that the Bedrock implementation of the OP Stack offers enable builders to address some of the core issues rollup-based scaling solutions face today, allowing them to customize every layer of the tech stack from sequencer to data availability solution and settlement layer.

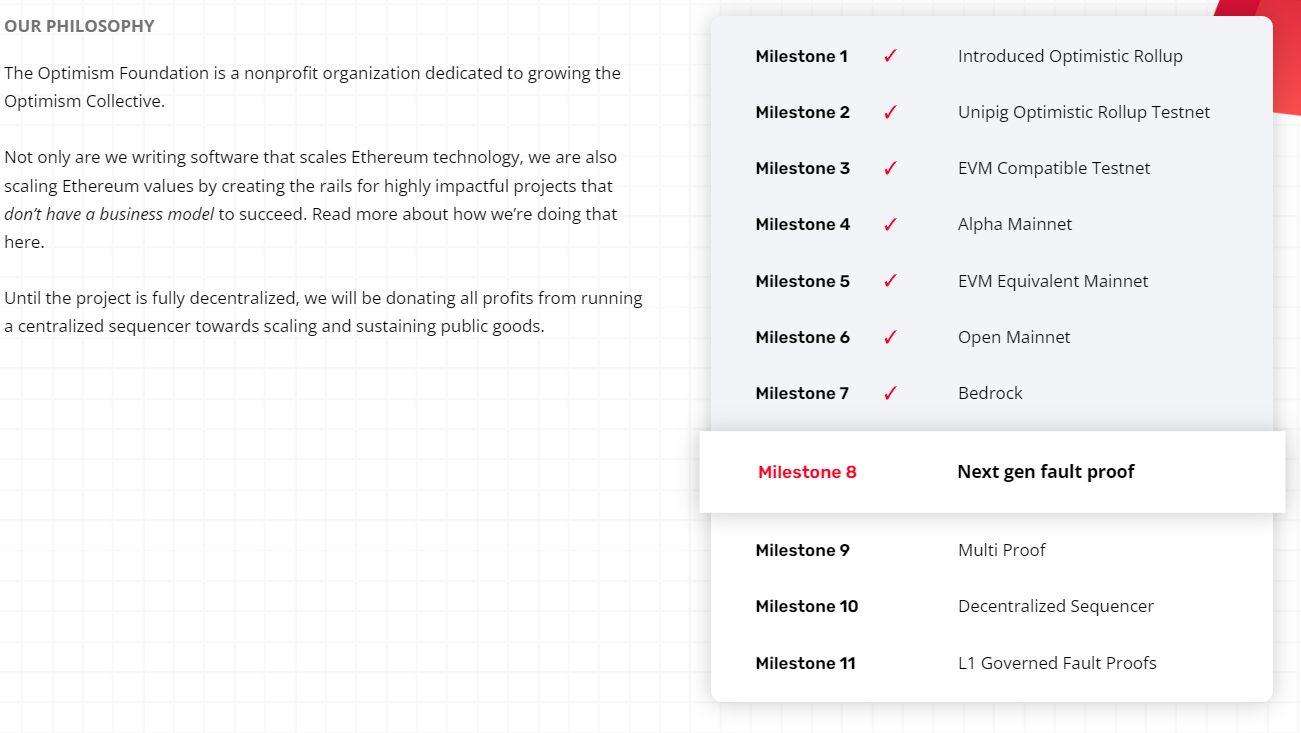

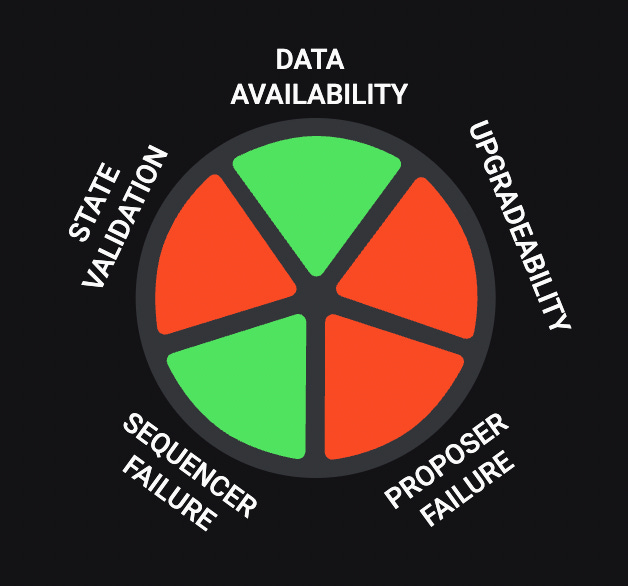

However, both rollups in general and Optimism and the OP Stack in particular are very nascent technologies at an early stage of development. This is evidenced by the current lack of a state validation mechanism on OP Mainnet and other OP Stack based chains, as well as by the absence of a mechanism to address proposer failure. Additionally, the upgradability of the rollup contract through fast upgrade keys and the currently centralized sequencer introduce additional risk from a user perspective and centralize control over the network. However, there is an ambitious roadmap in place that aims to address these issues and take off the training wheels as Optimism and the OP Stack continue to evolve.

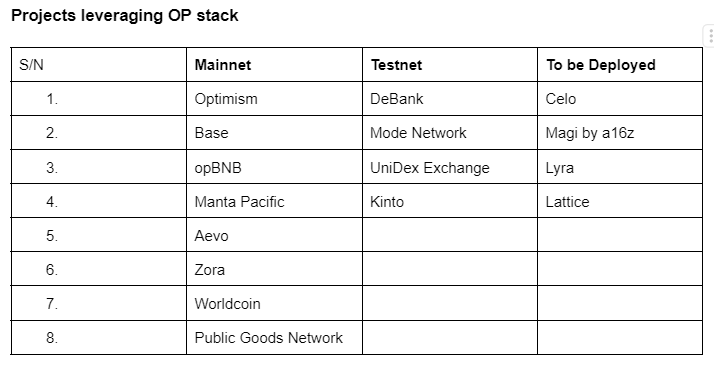

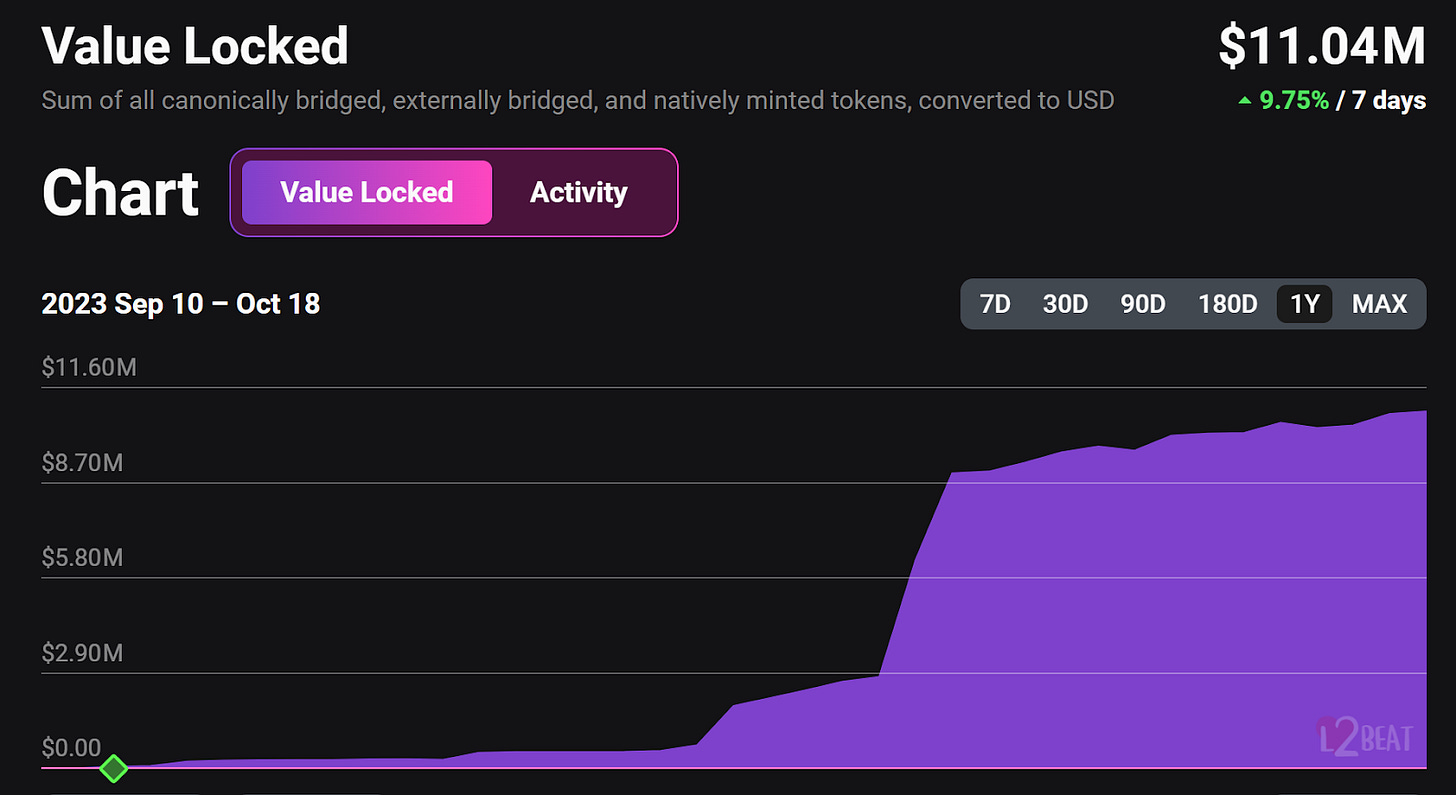

The product-market fit of the technology is showcased by the traction the OP stack has been gaining over the course of the past months, resulting in a fast-growing ecosystem of OP stack based chains that will form the backbone of the Superchain in the future.

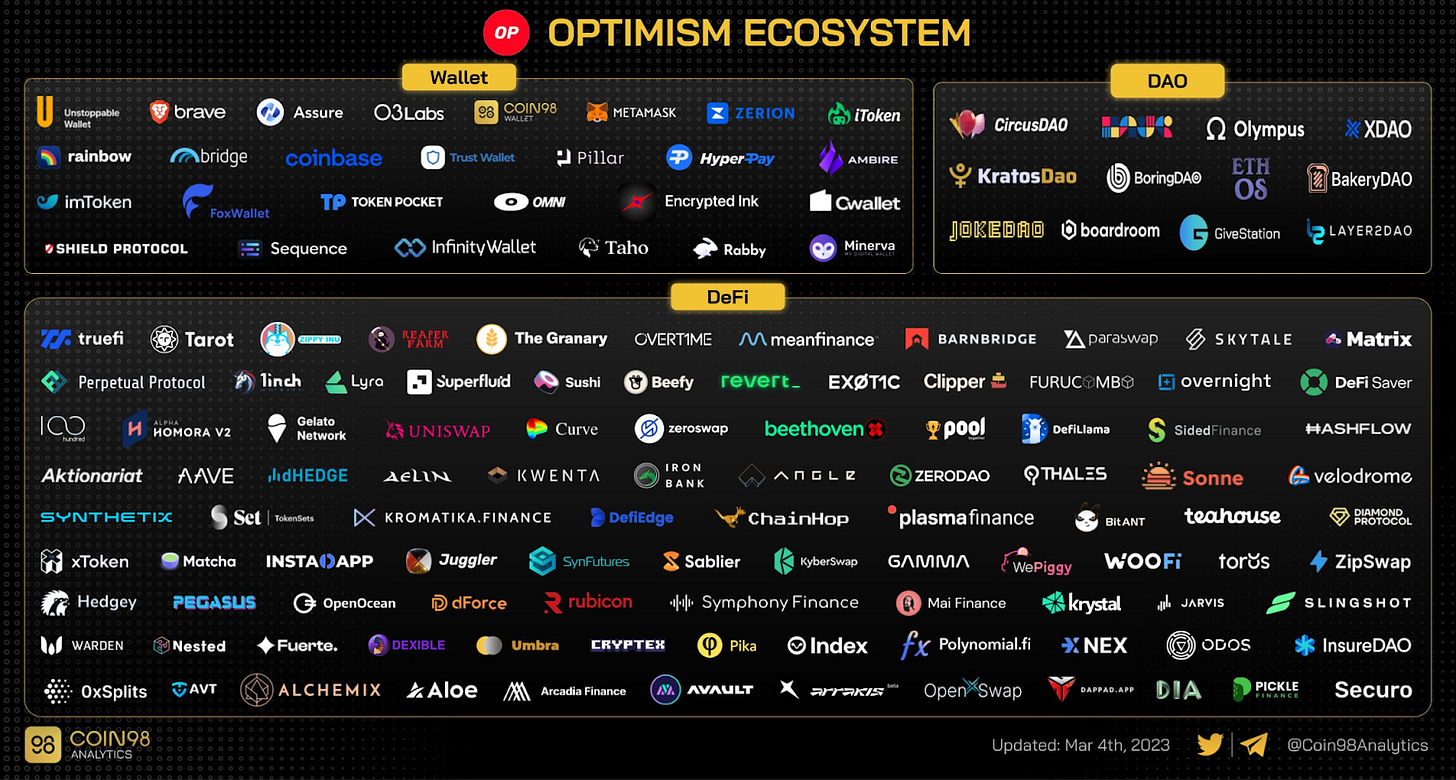

Aside from the technological standpoint, Optimism successfully developed an ecosystem with hundreds of dApps that attracted users, developers and bright teams. The development of this ecosystem has played a crucial role in establishing Optimism as a leading Layer 2 solution within the industry.

Introduction

Aiming to solve Ethereum’s scalability problem, Optimism’s OP Mainnet was one of the first general purpose EVM rollups to go live on mainnet. Since its launch, Optimism has consistently been at the forefront of L2 innovation, evolving into an ambitious vision of an interoperable ecosystem of L2s based on the OP stack termed the “Superchain”. This shared technological foundation of OP Mainnet and its fast growing ecosystem is what provides the basis for this Superchain vision to become reality, shaping the future of a more scalable Ethereum ecosystem.

Based on hundreds of hours of in-depth research, this report breaks down the Optimism ecosystem, exploring the technology behind Optimism and the Superchain vision, its unique features, and how it sets itself apart from other L2 solutions.

Whether you're a user or a builder, whether you’re a blockchain enthusiast already deeply familiar with the underlying technology or simply want to learn about the latest developments in the industry, this report aims to provide you with valuable insights into the past, present and future of scaling Ethereum, and into Optimism’s optimistic rollup tech in particular.

The report walks you through the history of scaling, explaining in detail how we got from a monolithic Ethereum to a rollup-centric vision for Ethereum 2.0 that will move users and on-chain activity from L1 to highly scalable rollup-based execution layers on L2. We introduce you to the modular thesis and dive deep into rollup mechanics and limitations, as well as potential solutions to overcome the obstacles this nascent scaling technology is facing. Subsequently, the OP Stack and the Superchain vision are broken down in a digestible way and covered in-depth to enable you to stay ahead of the curve. Finally, we have a look at network metrics, ecosystem, tokenomics, team, funding and financials to provide you with a comprehensive assessment of the overall state of the ecosystem and with actionable information.

History of Scaling

Scaling the throughput of monolithic blockchain networks while maintaining decentralization and network security has been a key problem that researchers and developers have been trying to solve for years. To reach true “mass adoption”, blockchains need to be able to scale, meaning they have to be able to process a large number of transactions quickly and at low cost. In other words, the performance of the blockchain must not suffer as more use cases arise and network adoption accelerates. Based on this definition, Ethereum lacks scalability.

With increasing network usage, gas prices on Ethereum have repeatedly spiked to unsustainably high levels, ultimately pricing out many smaller users from interacting with decentralized applications entirely. Examples include the BAYC land mint (leading to a surge of gas fees up to 8000 gwei) and the Art Blocks NFT drop (leading to a surge of gas fees to over 1000 gwei) - as a reference, gas sits at 6 gwei at the time of writing. Instances such as these gave alternative, more “scalable” L1 blockchains (e.g. Solana) a chance to eat into Ethereum’s market share. However, this also spurred innovation around increasing the throughput of the Ethereum network.

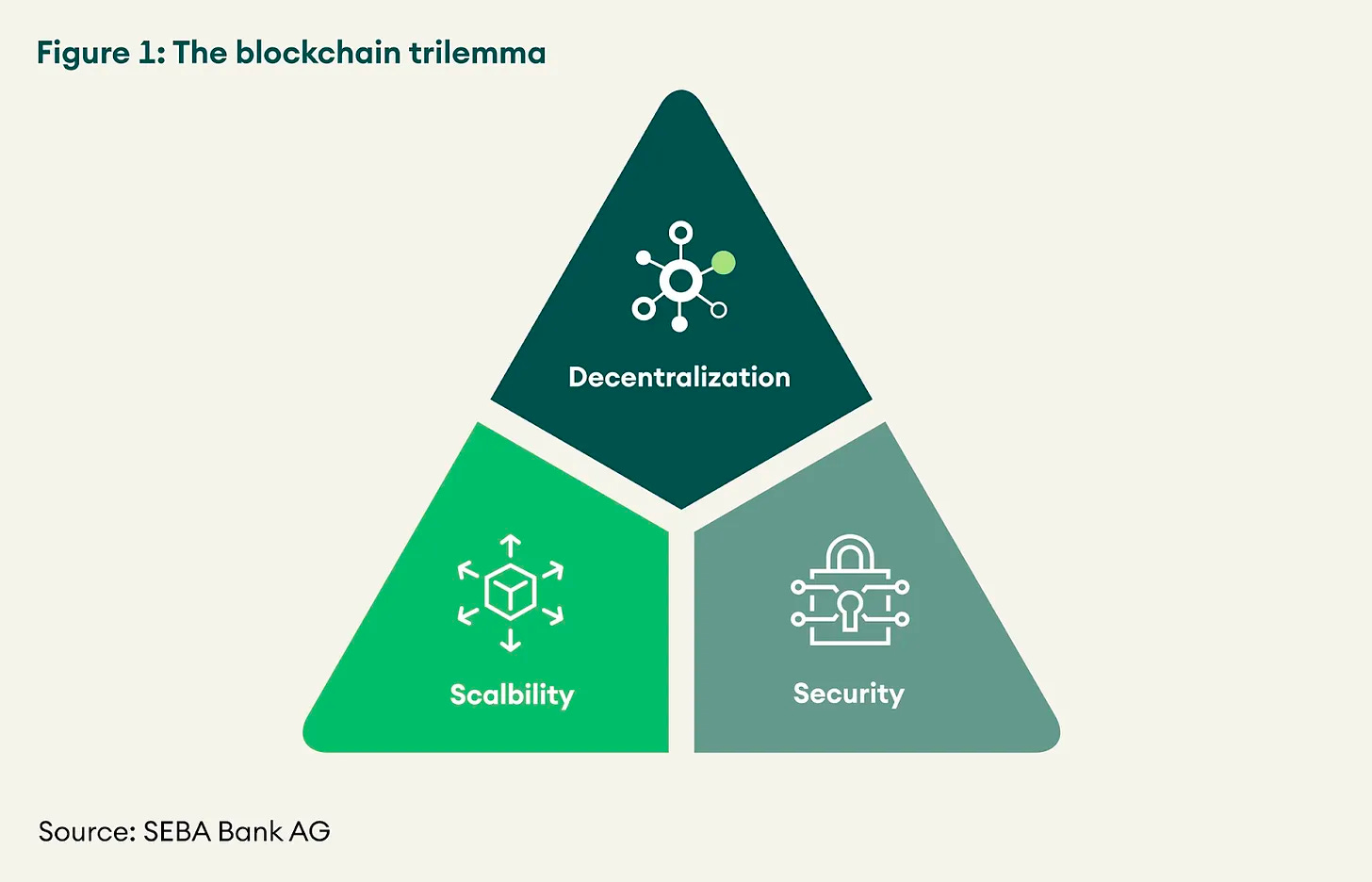

However, the scaling approaches these Alt-L1s are taking often come at the cost of decentralization and security. Alt-L1 chains like Solana, for example, have chosen to go with a smaller validator set and have increased hardware requirements for validators. While this improves the network’s ability to verify the chain and hold its state, it reduces how many people can actually verify the chain themselves and raises the barriers to entry for doing so. This conflict is also referred to as the blockchain trilemma (visualized below). The concept is based on the idea that a blockchain cannot achieve all three core qualities that any blockchain network should strive for (scalability, security & decentralization) at once.

Since decentralization and inclusion are two core values of the Ethereum community and Ethereum is built on a culture of users being able to verify the chain, it is not very surprising that running the chain on a small set of high-spec nodes is not a suitable path for scaling Ethereum. Even Vitalik Buterin argues that it is “crucial for blockchain decentralization for regular users to be able to run a node”. As other ecosystems share this ethos, different scaling approaches gained traction.

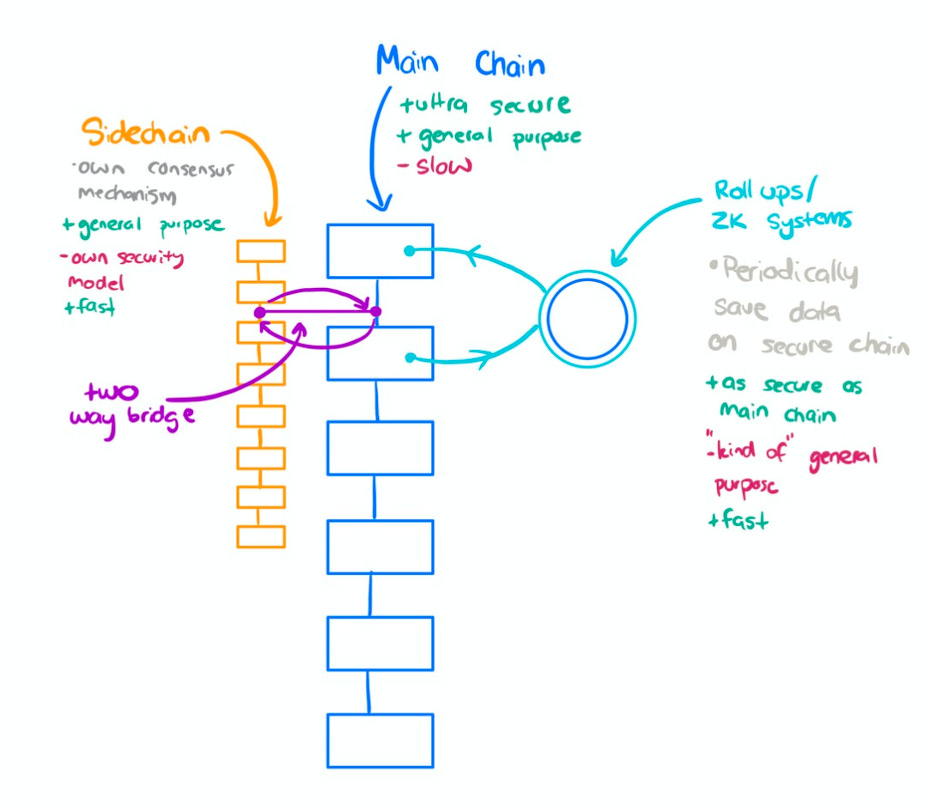

Sidechains, Plasma & State Channels

The idea behind side chains is to operate an additional blockchain in conjunction with a primary blockchain (Ethereum). This means the two blockchains can communicate with each other, facilitating the movement of assets between the two chains. A side-chain operates as a distinct blockchain that functions independently from Ethereum and links to Ethereum mainnet through a two-way bridge. Side-chains generally have their own block parameters and consensus algorithms, which are frequently tailored for streamlined transaction processing and increased throughput. However, utilizing a side-chain also means making a trade-off as it does not inherit Ethereum's security features.

In the unlikely event of a collusion scenario where the majority of validators engage in malicious activities, the community can still collaborate to redeploy the contracts on Ethereum and implement a fork that eliminates the malicious validators, enabling the chain to continue operating as intended.

One example of a side-chain, or perhaps rather a “commit chain”, is Polygon PoS. To increase security, the network uses a checkpointing technique in which a single Merkle root is periodically published to the Ethereum layer 1, but it is otherwise basically a standalone network with its own validator set. This published state is referred to as a checkpoint. Checkpoints are important as they provide finality on the Ethereum chain. The Polygon PoS Chain contract deployed on the Ethereum layer 1 is considered to be the ultimate source of truth, and therefore all validation is done via querying the Ethereum main chain contract.
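To make the checkpointing idea more concrete, the following minimal Python sketch folds a list of block hashes into a single Merkle root. The function names, the use of SHA-256 and the rule of duplicating the last node on odd levels are illustrative assumptions and do not reflect Polygon's actual implementation.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Hash the leaves, then fold them pairwise until a single root remains."""
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumption: pad odd levels with the last node
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


# Hypothetical side-chain block hashes covered by one checkpoint.
blocks = [f"block-{n}".encode() for n in range(5)]
checkpoint = merkle_root(blocks)
print(checkpoint.hex())
```

Only the 32-byte `checkpoint` would need to be published to L1, regardless of how many blocks it covers.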

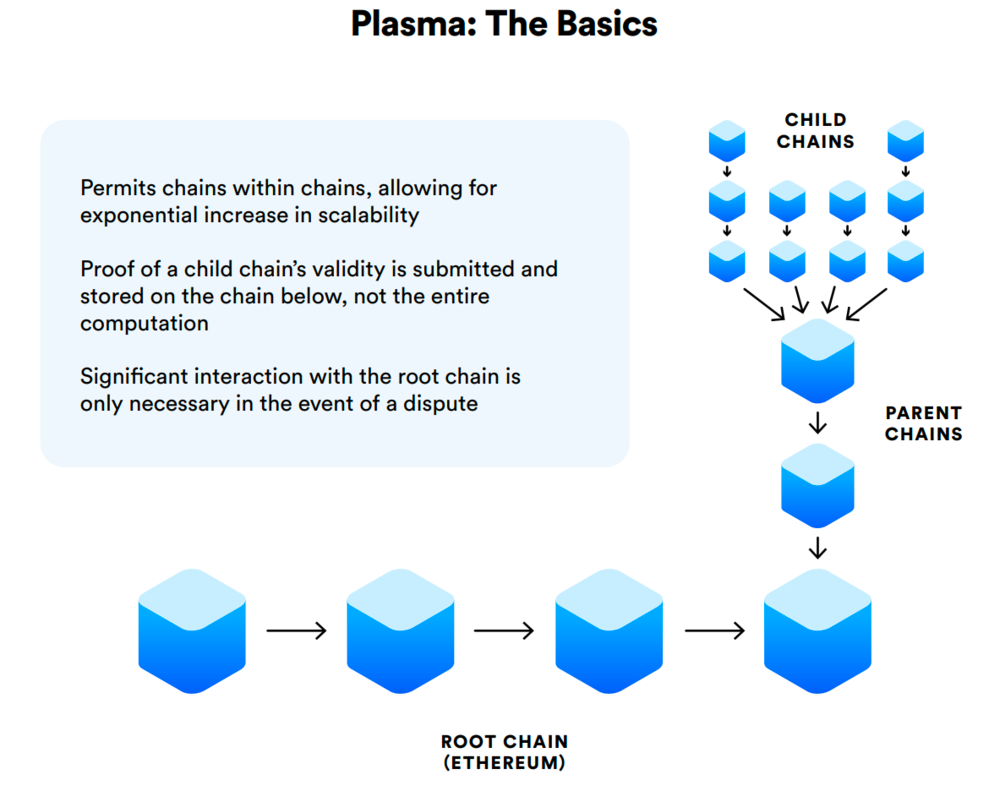

Similarly, plasma chains also utilize a proprietary consensus mechanism to generate blocks. However, unlike side-chains, plasma chains broadcast the "root" of each block to Ethereum. This is very similar to checkpointing on Polygon PoS but demands more communication with L1. The "root" in this context is essentially a small piece of information that enables users to demonstrate certain aspects of the L2 block's contents.
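How a small "root" lets a user demonstrate something about a block's contents can be illustrated with a Merkle inclusion proof. The sketch below is a simplified illustration, assuming a SHA-256 binary Merkle tree with last-node duplication on odd levels; the helper names are hypothetical and real plasma designs differ in their details.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every level of the tree, from the hashed leaves up to the root."""
    levels = [[h(leaf) for leaf in leaves]]
    while len(levels[-1]) > 1:
        cur = list(levels[-1])
        if len(cur) % 2 == 1:
            cur.append(cur[-1])  # assumption: duplicate the last node on odd levels
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def make_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Collect the sibling hashes on the path to the root.

    The boolean flag is True when the sibling sits to the left of the path node.
    """
    proof = []
    for level in build_levels(leaves)[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


txs = [f"tx-{n}".encode() for n in range(5)]
root = build_levels(txs)[-1][0]      # the only value that goes to L1
proof = make_proof(txs, 3)
print(verify(b"tx-3", proof, root))       # True
print(verify(b"tx-forged", proof, root))  # False
```

The proof grows logarithmically with the number of transactions, which is why a user can prove inclusion against the on-chain root without the full block being posted to L1.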

But while Polygon PoS has gained significant traction as priced-out users fled Ethereum in search of lower transaction fees, the overall adoption of side-chains and plasma chains as a scaling technology has remained limited.

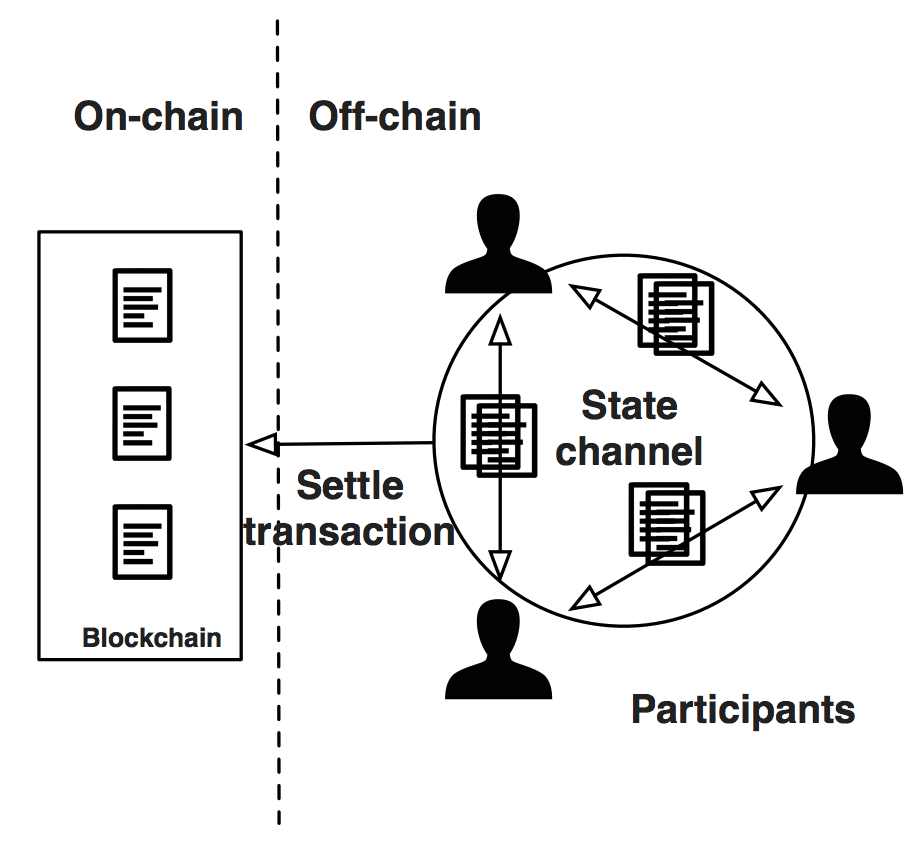

The same goes for a scaling approach referred to as state channels, which enables off-chain transactions between two or more parties. State channels allow participants to engage in a series of interactions, such as payments or game moves, without requiring each transaction to be recorded on the L1 blockchain.

The process begins with the creation of a smart contract on the Ethereum main chain. This contract includes the rules that govern the interactions and specifies the parties involved. Once the contract is established, the participants can open a state channel, which is an off-chain communication channel for executing transactions.

During the state channel's lifespan, the parties can engage in multiple interactions, updating the state of the contract through signed messages. The state changes are not immediately recorded on the blockchain, but because they are signed, the smart contract can verify and enforce them at a later time if necessary.

When the participants are finished with the interactions, they can close the state channel and publish the final state of the contract to the Ethereum main chain, which executes the contract and finalizes the transaction. This approach reduces the number of on-chain transactions needed to complete a series of interactions, allowing for faster and more efficient processing of transactions.
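The open, update and close lifecycle described above can be sketched in a few lines of Python. This is a toy model under loud assumptions: HMAC with shared secrets stands in for real digital signatures (e.g. ECDSA), `ChannelContract` is a hypothetical stand-in for the on-chain contract, and dispute handling is omitted entirely.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


def sign(key: bytes, payload: bytes) -> bytes:
    # Assumption: HMAC stands in for a real signature scheme.
    return hmac.new(key, payload, hashlib.sha256).digest()


def encode(nonce: int, balances: dict) -> bytes:
    return json.dumps({"nonce": nonce, "balances": balances}, sort_keys=True).encode()


@dataclass(frozen=True)
class SignedState:
    nonce: int
    balances: dict
    signatures: dict  # party name -> signature over the encoded state


def co_sign(keys: dict, nonce: int, balances: dict) -> SignedState:
    """Off-chain step: every party signs the new state."""
    payload = encode(nonce, balances)
    return SignedState(nonce, balances, {p: sign(k, payload) for p, k in keys.items()})


class ChannelContract:
    """Toy stand-in for the contract that locks deposits and settles the channel."""

    def __init__(self, keys: dict, deposits: dict):
        self.keys = keys  # a real contract would hold public keys instead
        self.total = sum(deposits.values())
        self.settled = None

    def close(self, state: SignedState) -> dict:
        payload = encode(state.nonce, state.balances)
        for party, key in self.keys.items():
            sig = state.signatures.get(party, b"")
            if not hmac.compare_digest(sig, sign(key, payload)):
                raise ValueError(f"missing or invalid signature from {party}")
        if sum(state.balances.values()) != self.total:
            raise ValueError("balances do not match deposits")
        self.settled = dict(state.balances)
        return self.settled


keys = {"alice": b"alice-secret", "bob": b"bob-secret"}
contract = ChannelContract(keys, {"alice": 10, "bob": 10})  # on-chain tx 1: open

state = co_sign(keys, 0, {"alice": 10, "bob": 10})
for nonce, amount in enumerate([3, 2, 1], start=1):         # off-chain payments
    balances = {
        "alice": state.balances["alice"] - amount,
        "bob": state.balances["bob"] + amount,
    }
    state = co_sign(keys, nonce, balances)

final = contract.close(state)                               # on-chain tx 2: close
print(final)  # {'alice': 4, 'bob': 16}
```

Three payments are settled with only two on-chain interactions, which is where the efficiency gain comes from.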

While state channels initially seemed like a promising solution for scaling blockchain networks like Ethereum, they require a certain level of trust between the participants, and there is a considerable risk of disputes arising if one party fails to follow the rules outlined in the smart contract. Therefore, state channels are primarily well suited for interactions between parties who trust each other, which limits the number of use cases the technology can feasibly support. Consequently, adoption has remained low as other scaling technologies took the spotlight.

Homogeneous Execution Sharding

While the Ethereum community was experimenting with side chains, plasma and state channels (which all have certain drawbacks that render them sub-optimal solutions), a scaling approach that many alternative L1 blockchains have chosen to take is referred to as homogeneous execution sharding. This also seemed like the most promising solution to Ethereum’s scalability issues for quite some time and was the core idea behind the old Ethereum 2.0 roadmap.

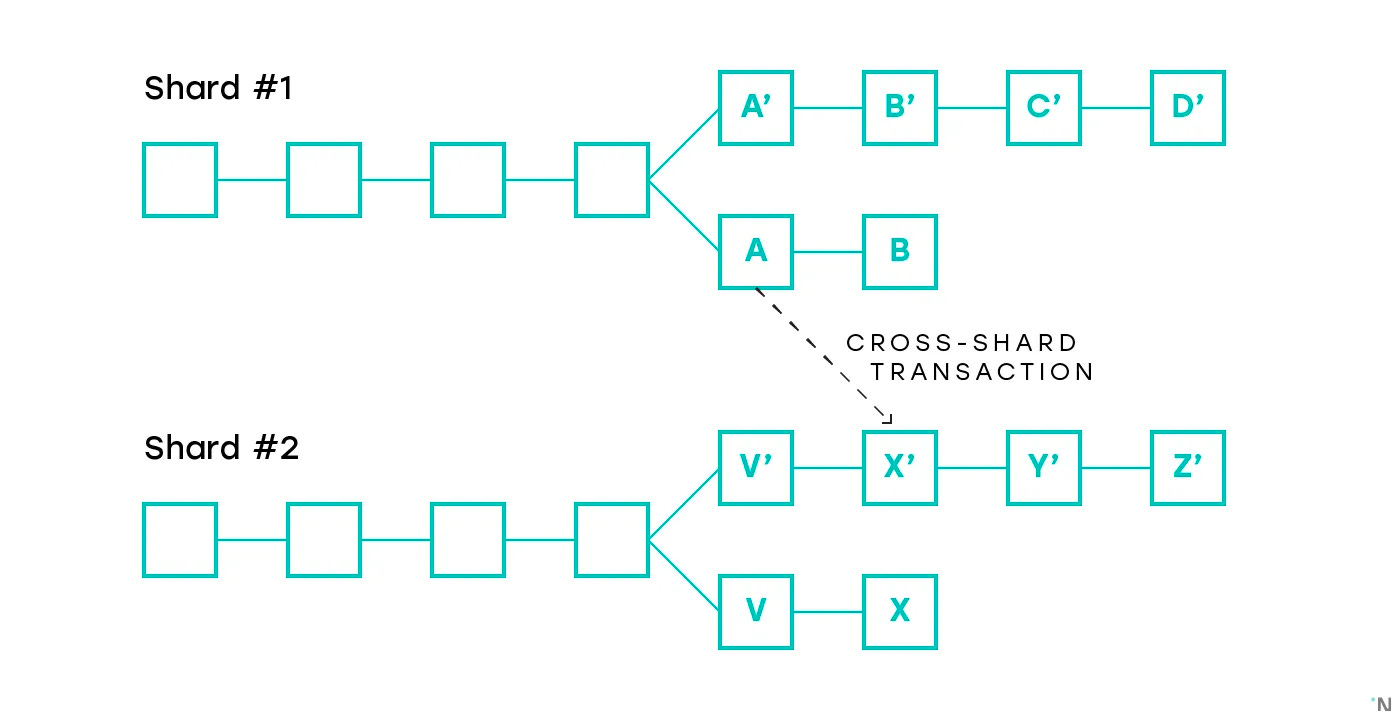

Homogeneous execution sharding is a blockchain scaling approach that seeks to increase the throughput and capacity of a blockchain network by splitting its transaction processing workload among multiple, smaller units (validator sub-sets) called shards. Each shard operates independently and in parallel, processing its own set of transactions and maintaining a separate state. The goal is to enable parallel execution of transactions across shards, thereby increasing the overall network capacity and speed. Examples of Alt-L1 chains that have opted for this approach are Harmony and NEAR.

Harmony is an L1 blockchain platform that aims to provide a scalable, secure, and energy-efficient infrastructure for decentralized applications (dApps). It uses a sharding-based approach in which the network is divided into multiple shards, each with its own set of validators who are responsible for processing transactions and maintaining a local state. Validators are randomly assigned to shards, ensuring a fair and balanced distribution of resources. The network uses a consensus algorithm called Fast Byzantine Fault Tolerance (FBFT), a variant of the Practical Byzantine Fault Tolerance (PBFT) consensus mechanism, to achieve fast and secure transaction validation across shards. Cross-shard communication is facilitated through a mechanism called "receipts," which allows shards to send information about the state changes resulting from a transaction to other shards. This enables seamless interactions between dApps and smart contracts residing on different shards.

Similarly, NEAR Network uses an execution sharding technology called Nightshade, which splits the work of processing transactions across many validator subsets. Nightshade utilizes block producers and validators to process transaction data in parallel across multiple shards so that each shard will produce a fraction of the next block (called a chunk). These chunks are then processed and stored on the NEAR main blockchain to finalize the transactions they contain.

This resembles the old Ethereum 2.0 roadmap, in which Ethereum would have consisted of a Beacon Chain and 64 shard chains. The Beacon Chain was designed to manage the PoS protocol, validator registration, and cross-shard communication. The shard chains on the other hand were thought of as individual chains responsible for processing transactions and maintaining separate states in parallel. Validators would have been assigned to a shard and would rotate periodically to maintain the security and decentralization of the network with the Beacon Chain keeping track of validator assignments and managing the process of finalizing shard chain data. Cross-shard communication was planned to be facilitated through a mechanism called "crosslinks," which would periodically bundle shard chain data into the Beacon Chain, allowing state changes to be propagated across the network. However, the Ethereum 2.0 roadmap has since evolved, and execution sharding has been replaced by an approach referred to as data sharding that aims to provide a scalable basis for a more complex scaling technology known as rollups (more on this later on).

But while homogeneous execution sharding promises great scalability, it does come with security trade-offs, as the validator set is split into smaller subsets and network decentralization is hence impaired. Additionally, the value at stake that provides crypto-economic security on each shard is reduced. This is part of the reason why execution sharding is not the approach that Ethereum takes to solve the scalability problem in the current (rollup-centric) roadmap of Ethereum 2.0.
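The mechanics and the trade-off discussed in this section can be sketched as follows. The example routes transactions to shards by hashing the sender address and randomly splits a validator set into per-shard committees. The shard count, the hash-based routing rule and the seeded pseudo-random generator (standing in for on-chain randomness) are all illustrative assumptions rather than the design of any specific chain.

```python
import hashlib
import random
from collections import defaultdict

NUM_SHARDS = 4  # hypothetical shard count


def shard_of(address: str, num_shards: int = NUM_SHARDS) -> int:
    """Route an account to a shard based on a hash of its address."""
    digest = hashlib.sha256(address.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def assign_validators(validators: list[str], num_shards: int = NUM_SHARDS, seed: int = 0) -> dict:
    """Shuffle the validator set and deal it out evenly across shards."""
    rng = random.Random(seed)  # assumption: seeded PRNG instead of a randomness beacon
    shuffled = list(validators)
    rng.shuffle(shuffled)
    committees = defaultdict(list)
    for i, validator in enumerate(shuffled):
        committees[i % num_shards].append(validator)
    return dict(committees)


validators = [f"val-{i}" for i in range(12)]
committees = assign_validators(validators)
for shard, members in sorted(committees.items()):
    print(shard, members)

print("0xabc is processed on shard", shard_of("0xabc"))
```

With 12 validators and 4 shards, each shard is secured by only 3 validators. That is the security trade-off in miniature: throughput scales with the number of shards, while the stake protecting any single shard shrinks accordingly.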

Heterogeneous Execution Sharding

Heterogeneous execution sharding is a blockchain scaling approach that connects multiple, independent blockchains with different consensus mechanisms, state models, and functionality into a single, interoperable network. This approach allows each connected blockchain to maintain its unique characteristics while, depending on the design, benefiting from the security and scalability of the overall ecosystem. Two prominent examples of projects that employ heterogeneous execution sharding are Polkadot (v1) and Cosmos.

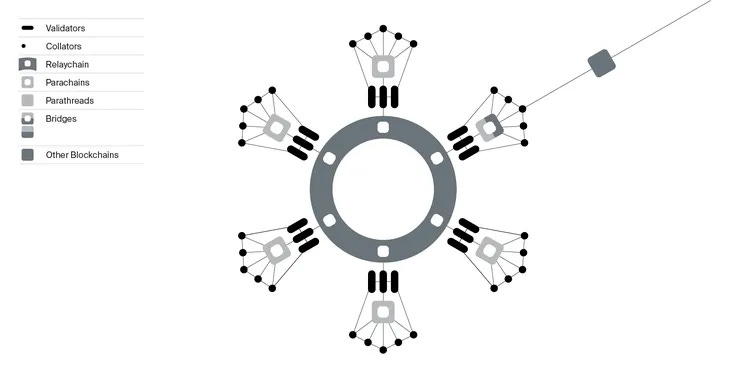

Polkadot (v1) is a decentralized platform designed to enable cross-chain communication and interoperability among multiple blockchains. Its architecture consists of a central Relay Chain, multiple Parachains, and Bridges.

Relay Chain: The main chain in the Polkadot ecosystem, responsible for providing security, consensus, and cross-chain communication. Validators on the Relay Chain are in charge of validating transactions and producing new blocks.

Parachains: Independent blockchains that connect to the Relay Chain to benefit from its security and consensus mechanisms, as well as enable interoperability with other chains in the network. Each parachain can have its own state model, consensus mechanism, and specialized functionality tailored to specific use cases.

Bridges: Components that link Polkadot to external blockchains (like Ethereum) and enable communication and asset transfers between these networks and the Polkadot ecosystem.

Polkadot uses a hybrid consensus mechanism called Nominated Proof-of-Stake (NPoS) on the Relay Chain to secure its network. Validators on the Relay Chain are nominated by the community, and they, in turn, validate transactions and produce blocks. Parachains can use different consensus mechanisms, run by what are referred to as collators, depending on their requirements. An important feature of Polkadot’s network architecture is that, by design, all parachains share security with the Relay Chain, hence inheriting the Relay Chain's security guarantees.

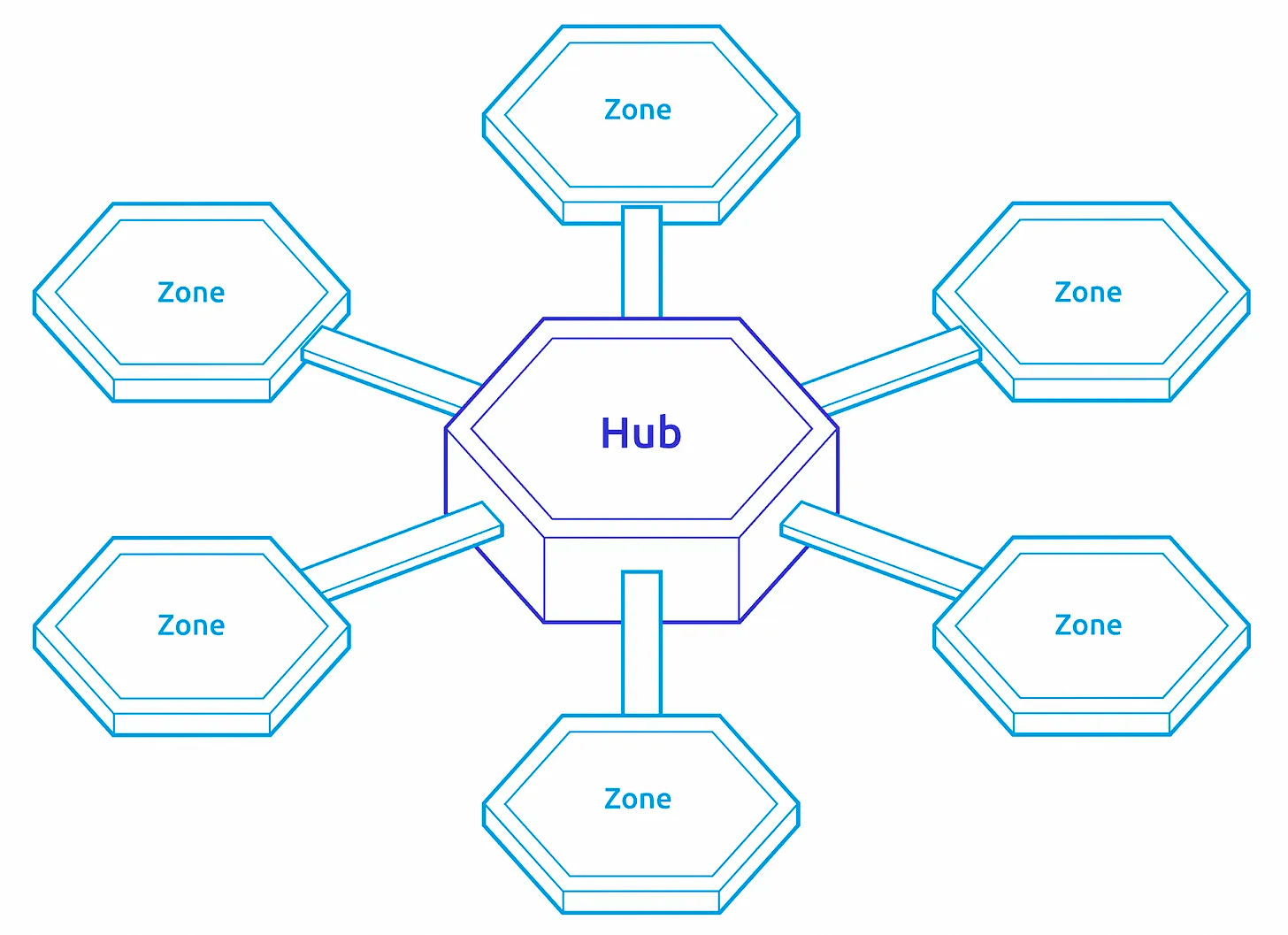

Similarly, Cosmos aims to create an “Internet of Blockchains”, facilitating seamless communication and interoperability between different application-specific blockchains. Its architecture is broadly similar to Polkadot’s and is composed of a central Hub, multiple Zones, and Bridges.

Hub: The central blockchain in the Cosmos ecosystem enabling cross-chain communication and soon inter-chain security (shared security similar to Polkadot). Cosmos Hub uses a Proof-of-Stake (PoS) consensus mechanism called Tendermint, which offers fast finality and high throughput. Theoretically, there can be multiple hubs. But especially with ATOM 2.0 and inter-chain security coming up, the Cosmos Hub will likely remain the center of the Cosmos-enabled internet of blockchains.

Zones: Independent blockchains connected to the Hub, each with its own consensus mechanism, state model, functionality and generally also validator set. Zones can communicate with each other through the Hub using a standardized protocol called Inter-Blockchain Communication (IBC).

Bridges: Components that link the Cosmos ecosystem to external blockchains, allowing asset transfers and communication between Cosmos Zones and other networks.

The main difference between the Polkadot and the Cosmos approach is the security model. While Cosmos goes for an approach in which the app-specific chains (heterogeneous shards) have to spin up and maintain their own validator sets, Polkadot opts for a shared security model. Under this shared security model, the app-chains inherit security from the relay chain that stands at the center of the ecosystem. The latter is much closer to the rollup-based scaling approach that Ethereum wants to take to enable scaling.

Modularity & Rollup-based Scaling

The Modular Thesis

As we have seen, building decentralized applications that are sovereign & unconstrained by the limitations of base layers is a complex endeavor. It requires coordinating hundreds of node operators, which is both difficult & costly. Moreover, it is hard to scale monolithic chains without making significant tradeoffs on security and decentralization.

While frameworks such as the Cosmos SDK and Polkadot’s Substrate make it easier to abstract certain software components, they don’t allow for a seamless transition from code into the actual physical network of p2p hardware. Moreover, heterogeneous sharding approaches might fragment ecosystem security, which can introduce additional friction & risk. So what is the alternative? Can we solve the blockchain trilemma by taking a different approach?

The answer is: Yes! This is exactly where the aforementioned rollups come to the rescue! But before we dive into what rollups are and how they work, let’s have a look at the bigger picture. Rollups sit at the core of what is often referred to as the “Modular Thesis”, which is basically the antithesis to monolithic scaling.

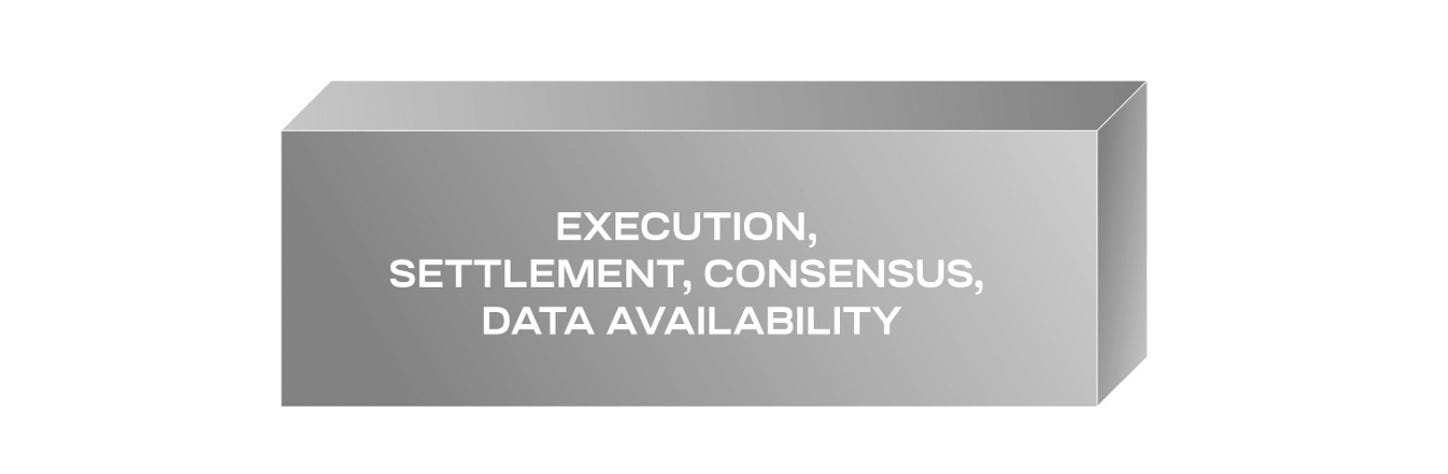

Modular blockchain design is a broad approach that separates a blockchain’s core functions (execution, settlement & consensus/DA) into distinct, interchangeable components. Within these functional areas, specialized providers arise that jointly facilitate building scalable and secure rollup execution layers, broad app design flexibility, and enhanced adaptability for evolving technological demands.

In monolithic networks on the other hand (e.g. the aforementioned Ethereum, Solana, Harmony or NEAR) execution, settlement & consensus/DA are unified in one layer. Let’s unpack what these terms mean:

Data Availability: The guarantee that data published to a network is accessible to and retrievable by all network participants (at least for a certain time).

Execution: Defines how nodes on the blockchain process transactions to transition the blockchain between states.

Settlement: Finality (probabilistic or deterministic) is a guarantee that a transaction committed to the chain is irreversible. This only happens when the chain is convinced of transaction validity. Hence, settlement means to validate transactions, verify proofs & arbitrate disputes.

Consensus: The mechanism by which nodes come to an agreement about what data on the blockchain can be verified as true & accurate.

But while this monolithic design approach has some advantages of its own (e.g. with regards to reduced complexity & simple composability), we have already covered why it doesn't necessarily scale well. Consequently, modularists pull these functions apart and have them handled by separate, specialized layers.

The modular design space hence consists of:

Execution layers (rollups)

Settlement layers (e.g. Ethereum)

Consensus/DA layers (e.g. Ethereum or Celestia)

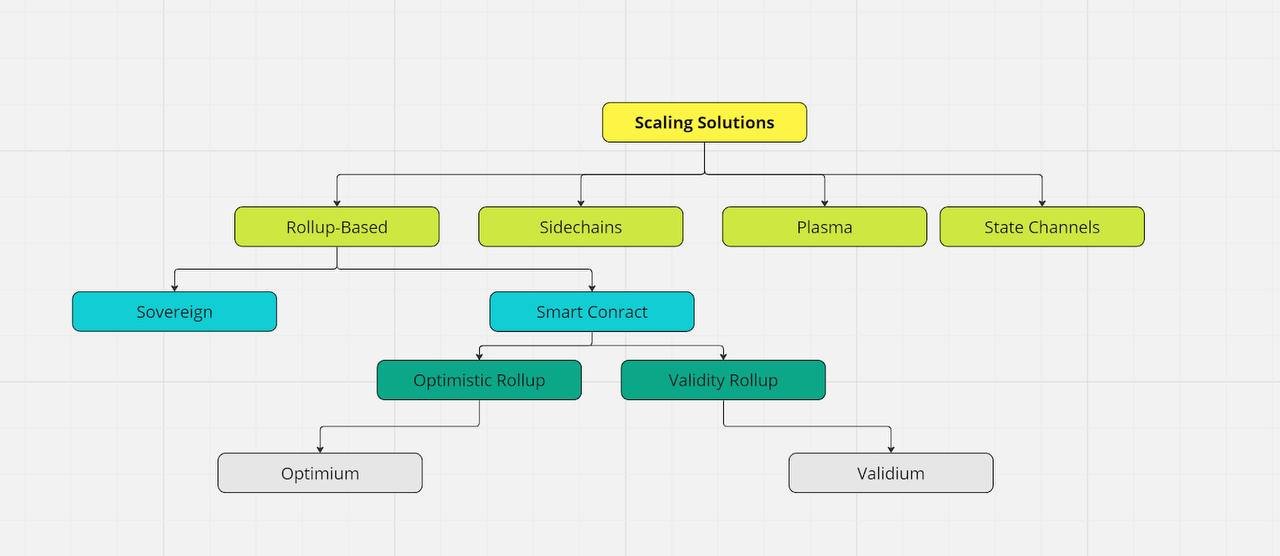

By separating these functions we can create a landscape in which rollups can pick and choose the ideal components for their use case, enabling a high degree of use case tailored customization. This enables a broad design space for rollup-based scaling solutions. Depending on the exact definition, the term “rollup” can be quite broad, including smart contract rollups, sovereign rollups as well as Validiums/Optimiums.

Smart Contract Rollups: Smart Contract Rollups are a type of blockchain that publishes its entire blocks to a settlement layer like Ethereum. The settlement layer’s primary functions are to order the blocks and verify the correctness of the transactions.

Sovereign Rollups: Sovereign Rollups are a type of blockchain that publishes its transactions to another blockchain, usually for data availability purposes, but handles its own settlement. Unlike Smart Contract Rollups, Sovereign Rollups do not use a settlement layer to determine their canonical chain and validity rules. The canonical chain of the Sovereign Rollup is determined by the nodes in the rollup's peer-to-peer network.

Validiums: Validiums are scaling solutions similar to “pure” rollups, but designed to improve throughput by processing transactions off the Ethereum Mainnet and specifically using an off-chain data availability solution. Similar to validity rollups, Validiums publish validity (zero-knowledge) proofs on-chain to verify off-chain transactions. However, they keep the data off-chain, which (depending on the design) can be posted to a committee of trusted parties or a separate chain.

Optimiums: Same as Validiums but using an optimistic state validation mechanism.
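The four categories above differ along three axes: where settlement happens, where the data is published, and how state is validated. The following sketch encodes that taxonomy as a small decision function. The type and field names are hypothetical and the classification is deliberately simplified; real designs can blur these lines.

```python
from dataclasses import dataclass
from enum import Enum


class StateValidation(Enum):
    FAULT_PROOFS = "optimistic"
    VALIDITY_PROOFS = "validity"


@dataclass(frozen=True)
class RollupDesign:
    settles_in_l1_contract: bool    # does a settlement-layer contract define the canonical chain?
    data_on_settlement_layer: bool  # is the transaction data published to that same layer?
    state_validation: StateValidation


def classify(design: RollupDesign) -> str:
    if not design.settles_in_l1_contract:
        return "sovereign rollup"       # canonical chain decided by the rollup's own p2p network
    if design.data_on_settlement_layer:
        return "smart contract rollup"  # blocks and data both go to the settlement layer
    if design.state_validation is StateValidation.VALIDITY_PROOFS:
        return "validium"               # validity proofs on-chain, data kept off-chain
    return "optimium"                   # fault proofs on-chain, data kept off-chain


print(classify(RollupDesign(True, True, StateValidation.FAULT_PROOFS)))
print(classify(RollupDesign(True, False, StateValidation.VALIDITY_PROOFS)))
print(classify(RollupDesign(True, False, StateValidation.FAULT_PROOFS)))
print(classify(RollupDesign(False, False, StateValidation.FAULT_PROOFS)))
```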

Rollup Mechanics

Before diving into the rollup mechanics, let’s have a look at the network participants. Depending on the exact implementation, a rollup can have various actors involved. Generally though there are three main actors which are defined below.

Light clients: Only receive the block headers and neither download nor process any transaction data (otherwise they would be fulfilling the tasks of a full node). Light clients can check state validity via fault proofs, validity proofs or data availability sampling.

Full nodes: Retrieve complete sets of rollup block headers and transaction data. They handle and authenticate all transactions to compute the rollup's state and ensure the validity of every transaction. If the full nodes find an incorrect transaction in a block the transaction is rejected and omitted from the block.

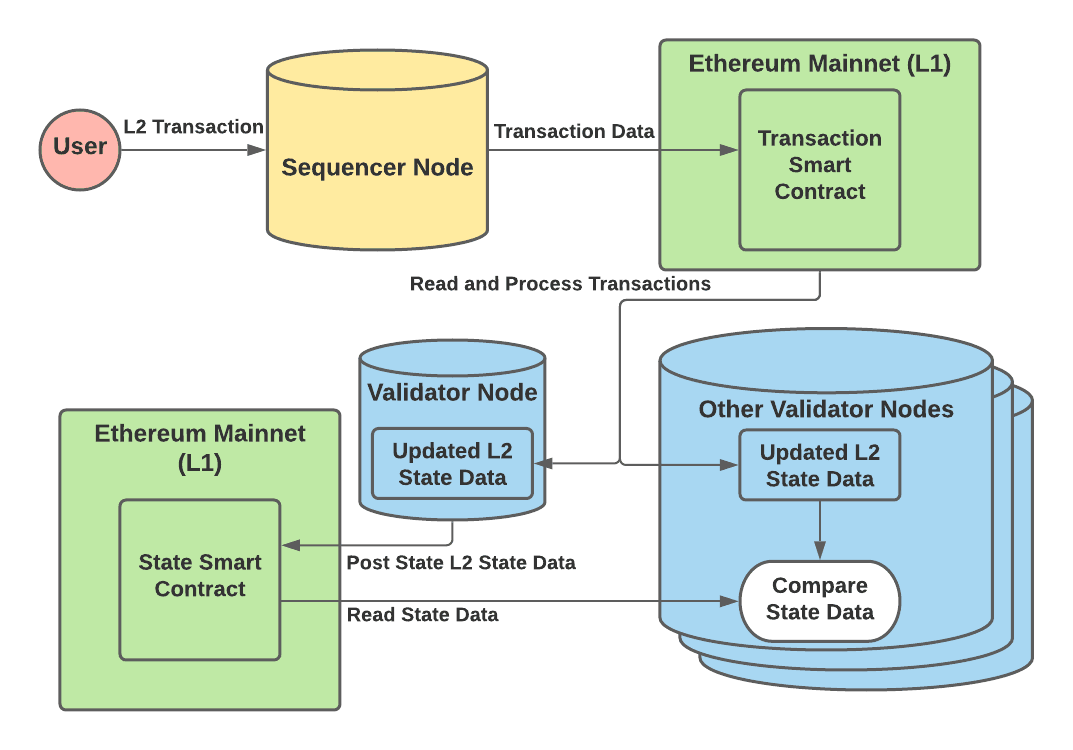

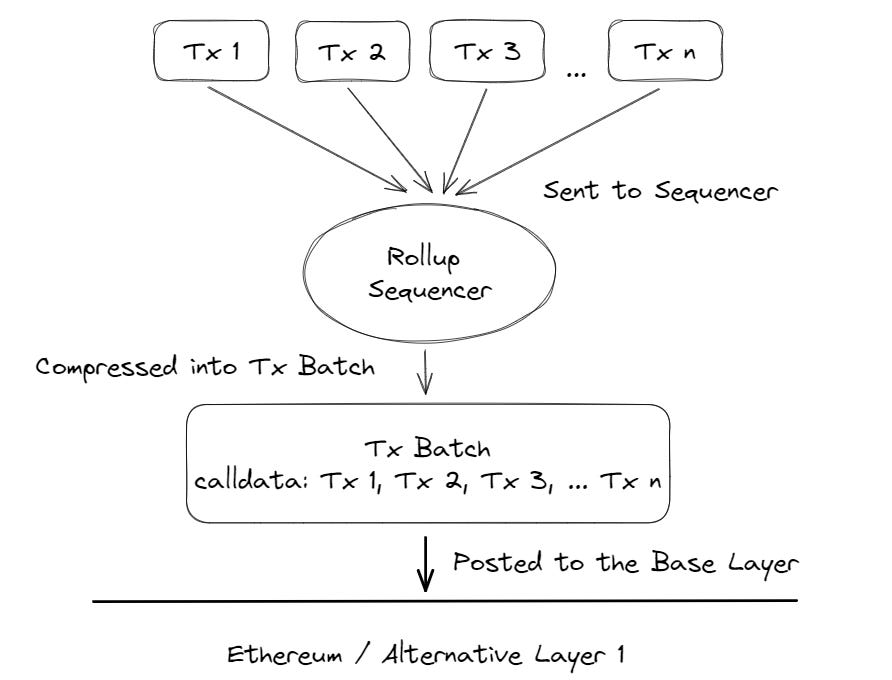

Sequencers: Specific rollup node with the primary tasks of batching transactions and generating new blocks for the rollup chain.

The two core principles that underlie the concept of rollups are:

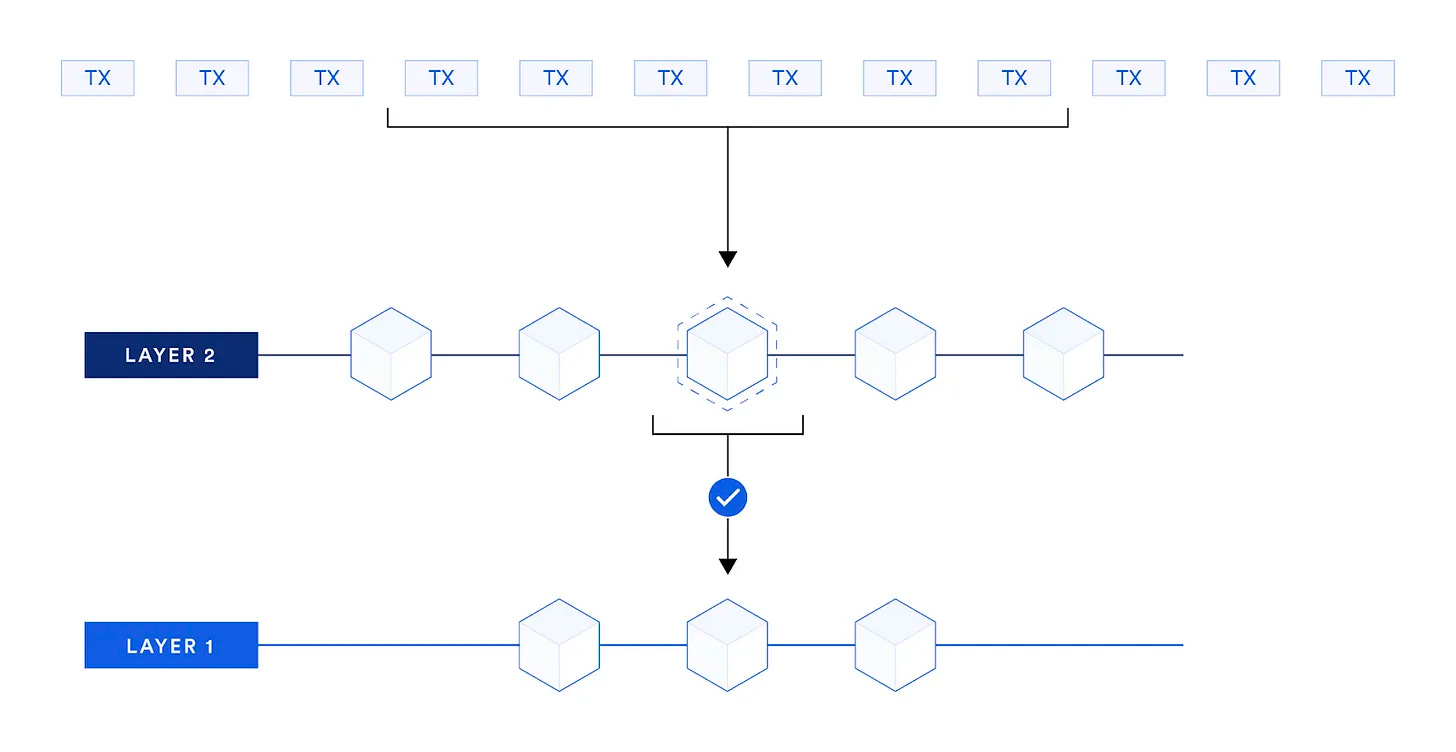

Aggregation: Rollup nodes collect multiple transactions and create a compressed summary, known as a rollup block, which contains the essential information needed for transaction verification and state updates.

Verification: These rollup blocks are then submitted to the main blockchain, where validator nodes verify the validity of the transactions within the block and ensure that they comply with the predefined rules.

Once the block is validated, the state of the rollup is updated on-chain, reflecting the outcome of the transactions. This allows rollups to achieve significant scalability improvements by reducing the computational transaction load and hence blockspace demand on the base layer blockchain while still being able to maintain secure & trustless transaction processing that is rooted in the main chain’s security guarantees. This effectively means moving transaction processing off-chain, while keeping the resulting state on-chain. The amount of data posted to the L1 can be further reduced using compression tricks such as superior encoding, omitting the nonce, replacement of 20-byte addresses with an index and more.
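To give a rough feel for the compression tricks mentioned above, the sketch below compares a naive transfer encoding with one that replaces 20-byte addresses with a 4-byte index, omits the nonce and uses a narrower amount field. All field widths and the `AddressRegistry` helper are assumptions chosen for illustration; actual rollups use their own formats and typically apply general-purpose compression on top.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    sender: bytes     # 20-byte address
    recipient: bytes  # 20-byte address
    nonce: int
    amount: int


def encode_naive(tx: Transfer) -> bytes:
    # 20 + 20 + 8 + 32 = 80 bytes per transfer
    return tx.sender + tx.recipient + tx.nonce.to_bytes(8, "big") + tx.amount.to_bytes(32, "big")


class AddressRegistry:
    """Maps each address to a small integer index the first time it is seen."""

    def __init__(self) -> None:
        self._index: dict[bytes, int] = {}

    def index_of(self, address: bytes) -> int:
        return self._index.setdefault(address, len(self._index))


def encode_compressed(tx: Transfer, registry: AddressRegistry) -> bytes:
    # 4 + 4 + 16 = 24 bytes: indexed addresses, no nonce, narrower amount field
    return (
        registry.index_of(tx.sender).to_bytes(4, "big")
        + registry.index_of(tx.recipient).to_bytes(4, "big")
        + tx.amount.to_bytes(16, "big")
    )


accounts = [bytes([i]) * 20 for i in range(10)]
batch = [
    Transfer(accounts[i % 10], accounts[(i + 1) % 10], nonce=i // 10, amount=1_000 + i)
    for i in range(100)
]
registry = AddressRegistry()
naive_size = sum(len(encode_naive(tx)) for tx in batch)
compressed_size = sum(len(encode_compressed(tx, registry)) for tx in batch)
print(naive_size, compressed_size)  # 8000 2400
```

In this toy setup the batch shrinks from 8000 to 2400 bytes. The sketch ignores signatures and the cost of maintaining the address index itself, so the real savings depend heavily on the concrete design.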

So to put this into context with the scaling solutions discussed previously, rollups basically take heterogeneous sharding within a shared security paradigm (the Polkadot approach) to the next level. Transactions are processed off-chain and (as the name suggests) rolled up into batches. Sequencers collect transactions from the users and submit the data to a smart contract on Ethereum L1 that enforces correct transaction execution on the L2, storing the transaction data on L1. As hinted at before, this enables rollups to inherit the security of the battle-tested Ethereum base layer (abstracting the potential risk arising from sequencers and other actors in the system).

What were essentially shards in the old Ethereum 2.0 roadmap are now completely decoupled from the base layer. This leaves devs with a wide open space to customize their L2 chain however they want (similar to Polkadot’s parachains or Cosmos’ zones), while still being able to rely on Ethereum L1’s security. Compared to side-chains or Cosmos zones, this comes with the advantage that a rollup does not need a validator set and consensus mechanism of its own. A rollup only needs to have a set of sequencers (responsible for transaction ordering), with only one sequencer needing to be live at any given time. With weak assumptions like this, rollups can actually run on a small set of high-spec server-grade machines or even a single sequencer, allowing for great scalability (although this comes at the cost of decentralization and, subsequently, security). However, most rollups try to design their systems to be as decentralized as possible (more on that later).

While rollups generally don’t need a consensus mechanism (as finality guarantees come from L1 consensus, where state validation happens), rollups can have coordination mechanisms with rotation schedules to rotate through a set of sequencers, or fully fledged PoS mechanisms in which a set of sequencers reaches consensus on transaction inclusion & ordering, thereby increasing decentralization & improving security.
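As a minimal illustration of a rotation schedule, the sketch below picks a sequencer per slot in round-robin fashion and falls back to the next live one if the scheduled sequencer is offline. The function name, the slot-based schedule and the fallback rule are hypothetical; production designs involve staking, leader election and liveness proofs that are not modelled here.

```python
def active_sequencer(sequencers: list[str], slot: int, live: set[str]) -> str:
    """Return the scheduled sequencer for a slot, skipping any that are offline."""
    n = len(sequencers)
    for offset in range(n):
        candidate = sequencers[(slot + offset) % n]
        if candidate in live:
            return candidate
    raise RuntimeError("no live sequencer: the rollup cannot produce blocks")


sequencers = ["seq-a", "seq-b", "seq-c"]

# All sequencers online: plain round-robin.
print([active_sequencer(sequencers, s, set(sequencers)) for s in range(4)])
# ['seq-a', 'seq-b', 'seq-c', 'seq-a']

# Only one sequencer online: it takes over every slot.
print(active_sequencer(sequencers, 4, {"seq-c"}))  # seq-c
```

The second call reflects the weak liveness assumption from the text: a single live sequencer is enough to keep the rollup producing blocks.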

Optimistic vs. Validity Rollups

Generally, there are two types of rollup systems, which differ with regards to the state validation mechanism they use.

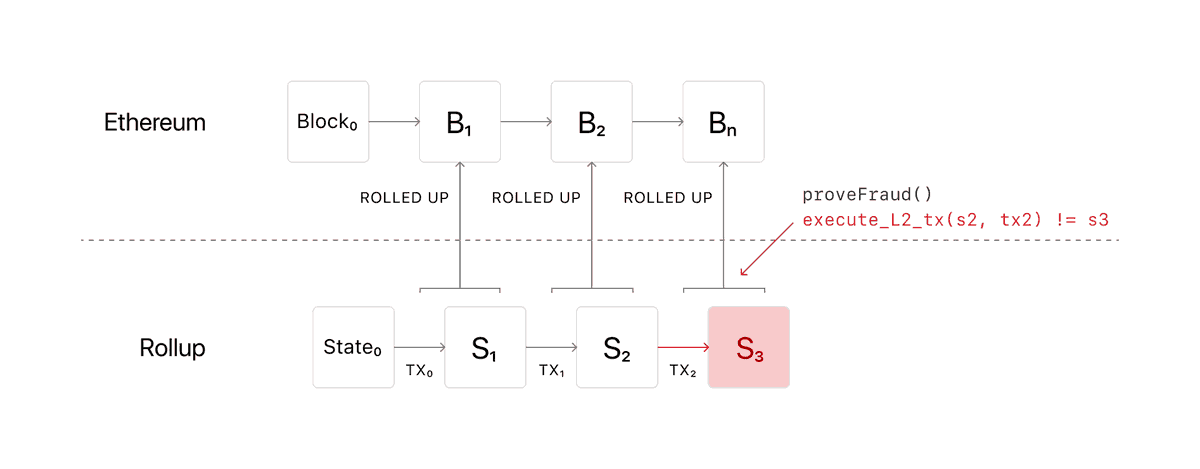

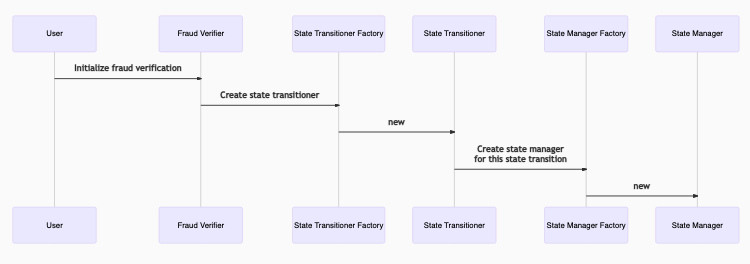

Aside from the approach to state validation, the mechanics of optimistic and validity rollups are quite similar. Both have a sequencer node that collects transactions from users, subsequently submitting this raw data to the DA layer (Ethereum L1 in most cases) alongside the new L2 state root. However, in order to ensure that the new state root submitted to Ethereum L1 is correct, optimistic rollups take a socio-economic fault proving (a.k.a. fraud proving) approach. In this model, transactions are generally assumed to be valid unless proven otherwise (hence the term “optimistic”).

To detect faults, verifier nodes watch the sequencers and compare the new state root they compute themselves to the one submitted by the sequencer. If there is a difference, they initiate what is called a fault proving process. If the fault proving mechanism proves that the actual state root is different from the one submitted by the sequencer, the sequencer’s stake (a.k.a. bond) is slashed (similar to slashing in PoS networks). However, this only holds true if the sequencer is actually required to put up a stake, which is generally not the case when the sequencer is run centrally by the team. The state roots from the fraudulent/faulty transaction onward are erased and the sequencer has to recompute the lost state roots (more details on this later).
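The verifier's job described above boils down to re-executing a batch and comparing state roots. The following sketch models that check. It is heavily simplified: the state root is just a hash of the JSON-encoded balances (an assumption; real rollups use Merkle-style state commitments), the `challenge` function is hypothetical, and actual fault proofs are resolved by an on-chain protocol rather than a single function call.

```python
import hashlib
import json


def apply_tx(state: dict, tx: tuple) -> dict:
    """Apply one transfer (sender, recipient, amount) and return the new state."""
    sender, recipient, amount = tx
    if state.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    new_state = dict(state)
    new_state[sender] = new_state.get(sender, 0) - amount
    new_state[recipient] = new_state.get(recipient, 0) + amount
    return new_state


def execute(state: dict, batch: list) -> dict:
    for tx in batch:
        state = apply_tx(state, tx)
    return state


def state_root(state: dict) -> str:
    # Assumption: a plain hash stands in for a Merkle-style state commitment.
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def challenge(pre_state: dict, batch: list, claimed_root: str, bond: int) -> tuple:
    """Re-execute the batch; return (fault_proven, remaining_bond)."""
    honest_root = state_root(execute(pre_state, batch))
    if honest_root != claimed_root:
        return True, 0   # fault proven: the sequencer's bond is slashed
    return False, bond   # claim was correct: nothing happens


genesis = {"alice": 100, "bob": 0}
batch = [("alice", "bob", 30)]

honest_claim = state_root(execute(genesis, batch))
fraudulent_claim = state_root({"alice": 100, "bob": 30})  # mints 30 out of thin air

print(challenge(genesis, batch, honest_claim, bond=1000))      # (False, 1000)
print(challenge(genesis, batch, fraudulent_claim, bond=1000))  # (True, 0)
```

Note that the check is only possible because the verifier has access to `batch`, i.e. the transaction data posted to the DA layer.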

Validity rollups (also called zero knowledge rollups) on the other hand rely on validity proofs in the form of zero knowledge proofs (e.g. SNARKs or STARKs) instead of fault proving mechanisms. Similar to optimistic rollup systems, a sequencer collects user transactions and is responsible for submitting the validity proof to L1. Depending on the design, the sequencer’s stake can also be slashed if they act maliciously, which incentivizes them to post valid blocks (and proofs). The prover (a role that doesn’t exist in the optimistic setup) generates unforgeable proofs of the execution of transactions, proving that these new states and executions are correct.

The sequencer subsequently submits these validity proofs to L1, providing them to the verifier smart contract on Ethereum mainnet in the form of a verifiable hash. The validity proof is also posted to the data availability/consensus layer alongside the transaction data, enabling state reconstruction in case something goes wrong on the L2. Theoretically, the responsibilities of sequencers and provers can be combined into one role. However, because proof generation and transaction ordering each require highly specialized skills to perform well, splitting these responsibilities prevents unnecessary centralization in a rollup’s design.

Determining which state validation mechanism is superior is difficult. However, let's briefly explore some key differences:

Firstly, because validity proofs can be mathematically proven, the Ethereum network can verify the legitimacy of batched transactions instantly and in a trustless manner through a verifier contract. This differs from optimistic rollups, where Ethereum relies on verifier nodes to validate transactions and execute fault proofs if necessary. Hence, some may argue that validity rollups are more secure. Furthermore, the instant confirmation of rollup transactions on the main chain allows users to transfer funds seamlessly between the rollup and the base blockchain (as well as other zk-rollups) without experiencing friction or delays. In contrast, optimistic rollups impose a waiting period before users can withdraw funds to L1 (7 days in the case of Optimism & Arbitrum), as the verifiers need to be able to verify the transactions and initiate the fault proving mechanism if necessary. While there are ways to enable fast withdrawals, it is generally not a native feature and these mechanisms often come with additional trust assumptions for the user.
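The withdrawal delay described above boils down to a simple time check. The sketch below assumes a 7-day window (the figure quoted for Optimism and Arbitrum) and uses a hypothetical function name; real bridge contracts track considerably more state.

```python
from datetime import datetime, timedelta, timezone

# Challenge window quoted in the text; treated here as a fixed assumption.
CHALLENGE_WINDOW = timedelta(days=7)


def withdrawal_finalizable(root_proposed_at: datetime, now: datetime, challenged: bool = False) -> bool:
    """A withdrawal can be finalized once the window has elapsed without a successful challenge."""
    return (not challenged) and (now - root_proposed_at) >= CHALLENGE_WINDOW


proposed = datetime(2023, 10, 1, tzinfo=timezone.utc)
print(withdrawal_finalizable(proposed, proposed + timedelta(days=3)))  # still inside the window
print(withdrawal_finalizable(proposed, proposed + timedelta(days=7)))  # window elapsed
```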

However, validity proofs are computationally expensive to generate and often costly to verify on-chain (depending on the proof size). Because optimistic rollups only need to run proofs when a state root is actually disputed, they gain an edge on validity rollups in terms of cost.

Rollup Limitations & Issues

But while rollups hold great promise with regard to solving the scalability issues Ethereum is facing, they are still a very nascent scaling technology with some limitations to overcome. This section focuses on the problems that rollup-based scaling solutions have to solve and the approaches that can potentially help to do so. Please note that this data availability focused section is an excerpt from an in-depth research report written by zerokn0wledge for Castle Capital.

Data Availability

The Data Availability Problem

The Data Availability problem refers to the question of how peers in a blockchain network can be sure that all the data of a newly proposed block is actually available. If the data is not available, the block might contain malicious transactions which are being hidden by the block producer. Even if the block contains non-malicious transactions, hiding them might compromise the security of the system, as nobody can verify the state of the chain anymore. Therefore, one can’t be sure all transactions are actually correct. So in other words, data availability refers to the ability of all participants (nodes) to access and validate the data on a network. This is a prerequisite for functioning blockchains or L2 scaling solutions, and ensures that the networks are transparent, secure, and decentralized.

This data availability problem is especially prominent in the context of rollup systems, which inherit security from the base layer they are built upon, where they post the transaction data to. It is hence very important that sequencers make transaction data available because DA is needed to keep the chain progressing (ensure liveness) and to catch invalid transactions (safety in the optimistic case). Additionally, data needs to be available to ensure that the sequencer (basically the rollup block producer) doesn’t misbehave and exploit its privileged position and the power it has over transaction ordering.

Data availability poses limitations to contemporary rollup systems: even if the sequencer of a given L2 were an actual supercomputer, the number of transactions per second it can actually process is limited by the data throughput of the underlying data availability solution/layer it relies on. Put simply, if the data availability solution/layer used by a rollup is unable to keep up with the amount of data the rollup’s sequencer wants to dump on it, then the sequencer (and the rollup) can’t process more transactions even if it wanted to.
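To make the bottleneck concrete, the upper bound on rollup throughput can be estimated from the data capacity of the DA layer. The numbers in the example (120,000 bytes of usable data per block, a 12-second block time, 100 bytes per compressed transaction) are purely illustrative assumptions, not measurements.

```python
def max_tps(da_bytes_per_block: int, block_time_s: float, bytes_per_tx: int) -> float:
    """Upper bound on transactions per second imposed by the DA layer alone."""
    txs_per_block = da_bytes_per_block / bytes_per_tx
    return txs_per_block / block_time_s


# Hypothetical parameters: however fast the sequencer is, it cannot exceed this rate.
print(max_tps(da_bytes_per_block=120_000, block_time_s=12, bytes_per_tx=100))
```

Doubling the sequencer's compute leaves this bound unchanged; only more DA capacity or better compression (fewer bytes per transaction) raises it.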

Hence, strong data availability guarantees are critical to ensure rollup sequencers behave. Moreover, maximizing the data throughput of a data availability solution/layer is crucial to enable rollups to process the number of transactions necessary to become execution layers supporting applications ready for mass adoption.

How can we ensure that data on a given data availability layer is actually available, or in other words, that a sequencer has actually published complete transaction data?

The obvious solution would be to simply force the full nodes in the network to download all the data dumped onto the DA layer by the sequencer. However, this would require full nodes to keep up with the sequencer’s rate of transaction computation, thereby raising the hardware requirements and consequently worsening the network’s decentralization (as barriers of entry to participate in block production/verification increase).

Not everyone has the resources to spin up a high-spec block-producing full node. But blockchains also can’t scale with low validator requirements. Consequently, there is a conflict here between scalability and decentralization. Additionally, centralizing forces in PoS networks (as stake naturally concentrates in a small number of validators), augmented by centralization tendencies caused by MEV, have led researchers to the conclusion that blockchains and decentralized validator sets don’t guarantee all the nice security properties we hoped for in the first place. Hence, many researchers argue that the key to scaling a blockchain network while preserving decentralization is to scale block verification and not production. This idea follows the notion that if the actions of a smaller group of consensus nodes can be audited by a very large number of participants, blockchains will continue to operate as trustless networks, even if not everyone can participate in block production (consensus). This has been the core thesis of Vitalik’s endgame article (December 2021), where he states:

“Block production is centralized, block validation is trustless and highly decentralized, and censorship is still prevented.”

Luckily, there are approaches that address the data availability issue by leveraging error correcting techniques like erasure coding and probabilistic verification methods such as data availability sampling (DAS). These core concepts play a key role in building scalable networks that can support the data availability needs of rollup-based execution layers.

Data Availability & Calldata on Ethereum

Before diving into these concepts, let’s have a look at the status quo of data availability on Ethereum to understand why these solutions are actually needed.

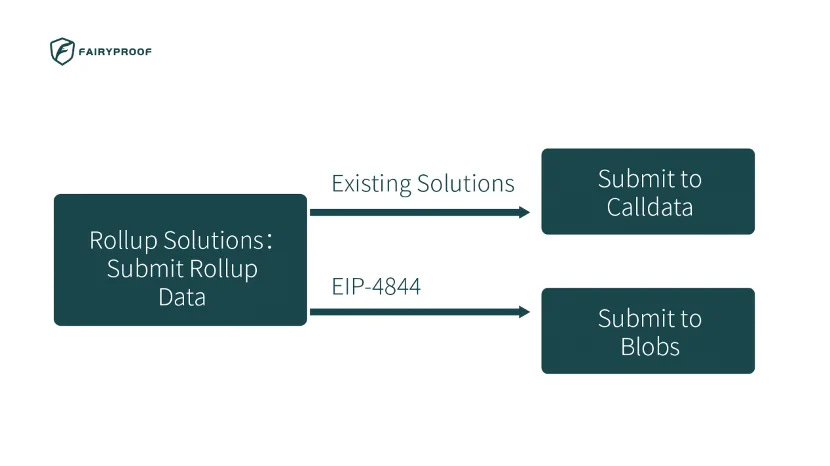

As already mentioned in the previous section, the capacity of the DA layer to handle the data that a rollup sequencer posts onto the DA layer is crucial. Currently, rollups utilize calldata to post data to Ethereum L1, ensuring both data availability and storage. Transaction calldata is the hexadecimal data passed along with a transaction that allows us to send messages to other entities or interact with smart contracts.

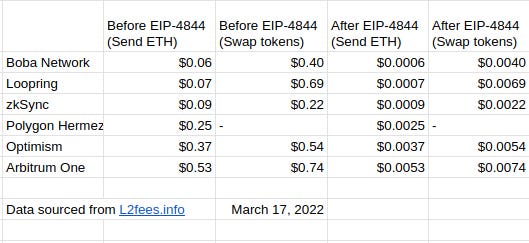

However, calldata is limited to ~10KB per block and, at a fixed price of 16 gas per byte, is very expensive. The limited data space on Ethereum and the high cost of L1 block space limit rollup scalability and are currently the main bottlenecks L2 scaling solutions face. To quantify this, calldata cost accounts for 90% of rollup transaction cost.
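A quick back-of-the-envelope calculation shows how this adds up. The sketch uses the 16 gas per byte figure from the text (in practice zero bytes are cheaper, which is ignored here); the batch size and the gas price are hypothetical inputs.

```python
GAS_PER_CALLDATA_BYTE = 16  # figure quoted in the text; zero bytes are ignored in this sketch
WEI_PER_GWEI = 10**9


def calldata_gas(num_bytes: int) -> int:
    """Gas consumed by posting `num_bytes` of calldata."""
    return num_bytes * GAS_PER_CALLDATA_BYTE


def calldata_cost_wei(num_bytes: int, gas_price_gwei: int) -> int:
    """Cost in wei of posting `num_bytes` at the given gas price."""
    return calldata_gas(num_bytes) * gas_price_gwei * WEI_PER_GWEI


# Hypothetical batch: 10,000 bytes posted at 30 gwei.
cost_wei = calldata_cost_wei(10_000, 30)
print(calldata_gas(10_000), "gas,", cost_wei / 10**18, "ETH")
```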

To overcome these limitations and reduce transaction costs on their execution layer, some L2 teams have come up with the idea of using off-chain DA solutions, instead of posting the data to Ethereum L1. This can be a fully centralized solution (such as a centralized storage system) or what is referred to as a data availability committee (DAC).

In the case of a DAC, the protocol relies on a set of trusted entities that guarantee to make data available to network participants and users. When we remove data availability requirements from Ethereum, the transaction cost of the L2 becomes much cheaper. However, having DA off-chain comes with a trade-off between cost and security. While Ethereum L1 provides very high liveness and censorship resistance guarantees, off-chain solutions require trust in parties that are not part of the crypto-economic security system that secures Ethereum mainnet.

Not surprisingly, there are initiatives aiming to reduce the cost rollups incur when posting data to L1. One of them is EIP-4488, which reduces the calldata cost from 16 to 3 gas per byte, with a cap on calldata per block to mitigate the security risks arising from state bloat. However, it does not directly increase the L1 data capacity limit, but rather balances the cost of L1 execution with the cost of posting data from L2 execution layers in favor of rollups, while retaining the same maximum capacity. This rebalancing means that the average usage of the available capacity will go up, while the maximum capacity will not. The need to increase maximum capacity still remains. However, at least in the short term, EIP-4488 allows us to enjoy lower rollup fees (as it could reduce costs by up to 80%).
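The "up to 80%" estimate follows directly from the two per-byte prices. A minimal check, assuming the saving applies only to the data-posting portion of a rollup's costs:

```python
from fractions import Fraction


def relative_saving(old_gas_per_byte: int, new_gas_per_byte: int) -> Fraction:
    """Fraction of the per-byte calldata cost that is saved by the repricing."""
    return 1 - Fraction(new_gas_per_byte, old_gas_per_byte)


saving = relative_saving(16, 3)
print(saving, "=", float(saving))
```

Since data posting is not the only cost component, the saving on total fees stays somewhat below this per-byte figure.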

The long-term solution to this is Danksharding. The mid-term, intermediate solution on the other hand is EIP-4844, which aims to increase the blockspace available to the rollups. EIP-4844 is expected to massively reduce the rollup cost base. But more on this in the next section.

EIP-4844 a.k.a. Proto-Danksharding

With transaction fees on L1 spiking with increased on-chain activity, there is great urgency to facilitate an ecosystem-wide move to rollup-based L2 solutions. However, as already outlined earlier in this report, rollups need data. Right now the cheapest data option for rollup is calldata. Unfortunately, this type of data is very expensive (costing 16 gas per byte).

So how can this issue be addressed? Simply lowering calldata costs might not be a good idea, as it would increase the burden on nodes. While archive nodes need to store all data, full nodes store the most recent 128 blocks. An increased amount of calldata would therefore increase storage requirements even more, worsening decentralization and introducing bloat risks if the amount of data dumped onto L1 continues to increase, at least until Ethereum has reached the ETH 2.0 stage that is referred to as “The Purge”. This stage involves a series of processes that remove old and excess network history and simplify the network over time. Aside from reducing the historical data storage, it also significantly lowers the hard disk requirements for node operators and the technical debt of the Ethereum protocol.

Nevertheless, rollups are already significantly reducing fees for many Ethereum users. Optimism and Arbitrum for example frequently provide fees that are ~3-10x lower than the Ethereum base layer itself. Application-specific validity rollups, which have better data compression, have achieved even lower fees in the past.

Similarly, Metis, an unconventional optimistic rollup solution, has achieved $0.01 transaction fees by implementing an off-chain solution for DA & data storage. Nonetheless, current rollup fees are still too expensive for many use cases (or, in the case of Metis, they come with certain security tradeoffs).

The long-term solution to this is danksharding, a specific implementation of data sharding, which will add ~16 MB per block of dedicated data space that rollups could use. However, data sharding will still take some time until it’s fully implemented and deployed.

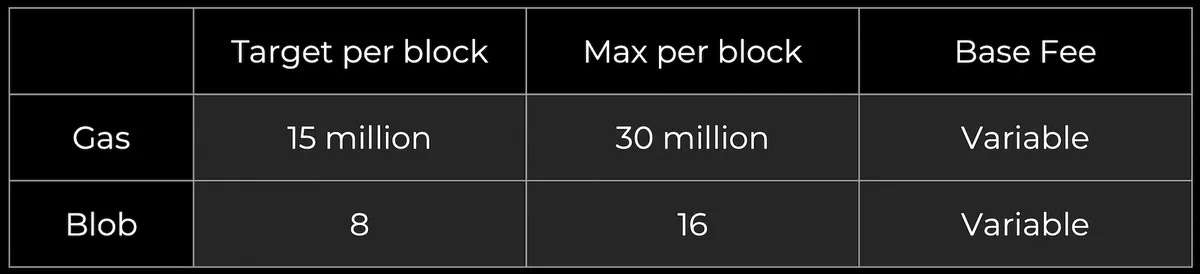

Until that stage, EIP-4844 provides an interim solution by implementing the transaction format that will be used in danksharding (data blobs), without actually implementing any sharding on the validator set. Instead, data blobs (Binary Large Objects) are simply part of the beacon chain and are fully downloaded by all consensus nodes.

To prevent state bloat issues, these data blobs can be deleted after one month. The new transaction type, which is referred to as a blob-carrying transaction, is similar to a regular transaction, except it also carries these extra pieces of data called blobs. Blobs are extremely large (~125 kB), and are much cheaper than similar amounts of calldata. EIP-4844 primarily addresses L2 transaction fee issues and is expected to have a large impact on those (see figure 3).

So, EIP-4844 (also referred to as proto-danksharding) is a proposal to implement most of the groundwork (e.g. transaction formats, verification rules) of full danksharding and is a pre-stage to the actual danksharding implementation. Rollups will have to adapt to switch to EIP-4844, but they won't have to adapt again when full sharding is rolled out, as it will use the same transaction format.

In a proto-danksharding paradigm, all validators and users still have to directly validate the availability of the full data. Because validators and clients still have to download full blob contents, data bandwidth in proto-danksharding is targeted to 0.375 MB/block instead of the full 16 MB that are targeted with danksharding.
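The 0.375 MB target can be reproduced from the blob parameters. The sketch below assumes the parameters of the EIP-4844 specification as we understand them (4096 field elements of 32 bytes per blob and a target of 3 blobs per block); these constants are not stated in this report and should be checked against the EIP itself.

```python
# Assumed EIP-4844 parameters (not taken from this report).
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
TARGET_BLOBS_PER_BLOCK = 3

MIB = 2**20

blob_bytes = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT
target_mib_per_block = TARGET_BLOBS_PER_BLOCK * blob_bytes / MIB

# Ratio between the full danksharding target quoted in the text and the proto-danksharding target.
DANKSHARDING_TARGET_MIB = 16
capacity_ratio = DANKSHARDING_TARGET_MIB / target_mib_per_block

print(blob_bytes, "bytes per blob")
print(target_mib_per_block, "MiB target per block")
print(round(capacity_ratio, 1), "x more data with full danksharding")
```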

However, this already allows for significant scalability gains as rollups won’t be competing for this data space with the gas usage of existing Ethereum transactions. Basically, this new data type is coming with its own independent EIP-1559-style pricing mechanism. Hence, layer 1 execution can keep being congested and expensive, but blobs themselves will be super cheap for the short to medium term.
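The independent pricing mechanism can be sketched as follows. The structure mirrors the pseudocode of the EIP-4844 specification as we recall it: the fee grows exponentially with the "excess blob gas", i.e. the running total of blob gas consumed above the per-block target. The constant values are assumptions on our part and should be verified against the EIP before being relied upon.

```python
# Constants recalled from the EIP-4844 specification (assumptions, verify before use).
GAS_PER_BLOB = 2**17
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB
MIN_BASE_FEE_PER_BLOB_GAS = 1  # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator) via a Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Excess accumulates when blocks use more than the target and drains when they use less."""
    return max(0, parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK)


def blob_base_fee(excess_blob_gas: int) -> int:
    """Blob base fee in wei per unit of blob gas."""
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION)


# Simulate ten consecutive blocks that each carry 6 blobs (twice the assumed target).
excess = 0
for _ in range(10):
    excess = next_excess_blob_gas(excess, 6 * GAS_PER_BLOB)
print(excess, blob_base_fee(excess))
```

Because the excess only depends on blob usage, congestion in regular L1 execution does not feed into the blob fee.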

While nodes can safely remove the data after a month, it's extremely unlikely for it to truly be lost. Because the blob commitments remain on-chain, a single honest party that kept the data is enough to prove to everyone else that "this blob was committed inside block X” (this is the 1-of-N trust assumption). Block explorers will likely keep this data, and rollups themselves will have an incentive to keep it too since they might need it. In the long term, rollups are likely to incentivize their own nodes to keep relevant blobs accessible.

Rollup-Centric Ethereum 2.0 & Danksharding

Alongside the Proof of Stake (PoS) consensus mechanism, the other central feature in the ETH 2.0 design is Danksharding. In the old ETH 2.0 roadmap, the key idea of how to scale the network was execution sharding, where L1 execution was split among a set of 64 shards, all capable of general-purpose EVM execution. Ethereum has since pivoted to a rollup-centric roadmap, introducing a limited form of sharding called data sharding: these shards would store data and attest to the availability of ~250 kB-sized blobs of data (double the size of EIP-4844 blobs), transforming the Ethereum base layer into a secure, high-throughput data availability layer for rollup-based L2s.

In a post in the Ethereum Research forum, Vitalik Buterin pointed out how this differs from execution sharding and how rollups fit into the data sharding approach:

But let’s have a look at how data sharding (more specifically danksharding) works in detail. As we know from earlier sections, making data available is a key responsibility of any rollup’s sequencer and ensures security & liveness of the network. However, keeping up with the amount of data that sequencers post onto the data availability layer can be challenging, especially if we want to preserve the network’s decentralization.

Under the Danksharding paradigm we use two intertwined techniques to verify the availability of high volumes of data without requiring any single node to download all of it:

Attestations by randomly sampled committees (shards)

Data availability sampling (DAS) on erasure coded blobs

Suppose that:

You have a big amount of data (e.g. 16 MB, which is the average amount that the ETH 2.0 chain will actually process per block initially)

You represent this data as 64 “blobs” of 256 kB each.

You have a proof of stake system, with ~6400 validators.

How do you check all of the data without…

…requiring anyone to download the whole thing?

…opening the door for an attacker who controls only a few validators to sneak an invalid block through?

We can solve the first problem by splitting up the work: validators 1…100 have to download and check the first blob, validators 101…200 have to download and check the second blob, and so on. But this still doesn’t address the issue that the attacker might control some contiguous subset of validators. Random sampling solves the second issue by using a random shuffling algorithm to select these committees.

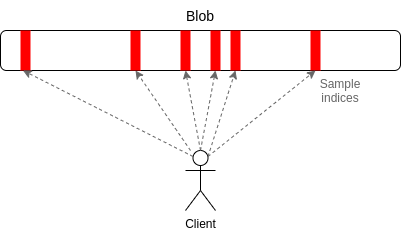
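The committee assignment described above can be sketched with the numbers from the example (6400 validators, 64 blobs). Python's `random` module is used here purely as a stand-in for the protocol's verifiable randomness; the function name and the seed are hypothetical.

```python
import random


def assign_committees(validators: list[int], num_committees: int, seed: int) -> list[list[int]]:
    """Shuffle validators with a shared seed and cut the result into equal-sized committees."""
    shuffled = list(validators)
    random.Random(seed).shuffle(shuffled)  # stand-in for an unbiasable on-chain shuffle
    size = len(shuffled) // num_committees
    return [shuffled[i * size:(i + 1) * size] for i in range(num_committees)]


validators = list(range(6400))
committees = assign_committees(validators, num_committees=64, seed=2023)

# Committee i downloads and checks blob i; membership is no longer contiguous.
print(len(committees), "committees of", len(committees[0]), "validators")
print(committees[0][:5])
```

An attacker controlling validators 1…100 now ends up spread across many committees instead of owning the whole committee for the first blob.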

DAS is in some ways a mirror image of randomly sampled committees. There is still sampling going on, in that each node only ends up downloading a small portion of the total data, but the sampling is done client-side, and within each blob rather than between blobs. Each node (including client nodes that are not participating in staking) checks every blob, but instead of downloading the whole blob, they privately select N random indices in the blob (e.g. N = 20) and attempt to download the data at just those positions.

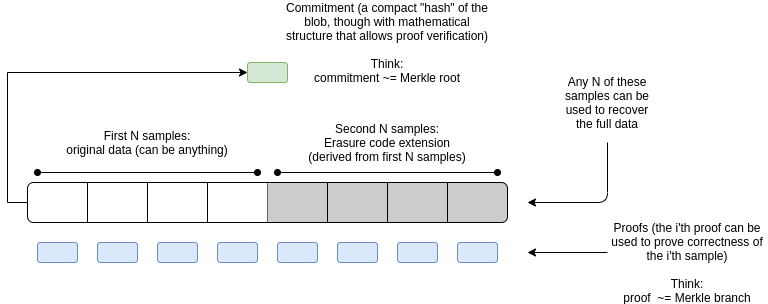
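Client-side sampling can be sketched as follows. The blob is modeled as a list of chunk indices and the set of chunks the network actually serves; all names are hypothetical. If only a fraction f of the chunks is retrievable, the chance that N samples all succeed is at most f to the power of N.

```python
import random


def sample_blob(available: set[int], blob_len: int, n_samples: int, rng: random.Random) -> bool:
    """Request `n_samples` distinct random chunk indices; succeed only if all are served."""
    indices = rng.sample(range(blob_len), n_samples)
    return all(index in available for index in indices)


def undetected_probability(fraction_available: float, n_samples: int) -> float:
    """Upper bound on the chance that every sample hits an available chunk."""
    return fraction_available ** n_samples


rng = random.Random(7)
blob_len = 512
fully_available = set(range(blob_len))
print(sample_blob(fully_available, blob_len, 20, rng))  # an honest publisher always passes
print(undetected_probability(0.5, 20))  # bound for a publisher withholding half the chunks
```

Each node samples privately, so a withholding publisher cannot know in advance which chunks a given node will ask for.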

To cover the extreme case where an attacker only makes 50-99% of the data available, Danksharding relies on a technology called erasure coding, an error correction technique. The key property is that, if the redundant data is available, the original data can be reconstructed in the event some part of it gets lost. Even more importantly though, it doesn’t matter which part of the data is lost: as long as X% (tolerance threshold) of the data is available, the full original data can be reconstructed.

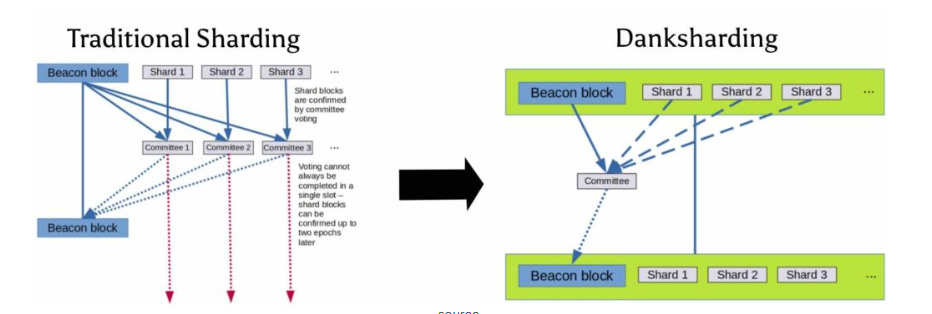
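The reconstruction property can be demonstrated with a small Reed-Solomon style code. This is a didactic sketch, not the scheme Ethereum specifies: the data is treated as evaluations of a polynomial over a prime field (the modulus is chosen arbitrarily here), extended to twice its length, and recovered from any half of the extended chunks, so the tolerance threshold X is 50% in this example.

```python
P = 2**31 - 1  # prime modulus, chosen only for this illustration


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        total = (total + yj * num * pow(den, -1, P)) % P
    return total


def encode(data: list[int]) -> list[int]:
    """Extend k data chunks (each < P) to 2k chunks; the first k are the original data."""
    k = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def reconstruct(shares: dict[int, int], k: int) -> list[int]:
    """Recover the k original chunks from any k surviving (index, value) pairs."""
    if len(shares) < k:
        raise ValueError("not enough chunks to reconstruct")
    points = list(shares.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]


data = [5, 17, 42, 7]
coded = encode(data)
surviving = {i: coded[i] for i in (1, 4, 6, 7)}  # half of the chunks are lost
print(reconstruct(surviving, k=4))
```

It does not matter which four of the eight chunks survive; any four distinct evaluations determine the polynomial and therefore the original data.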

Rollups under the Danksharding paradigm offer similar scalability to the computational shards in the old roadmap. This is possible thanks to the final piece of the puzzle: data shards. Data sharding essentially means that not every validator will continue to download the same transaction data as nodes do currently (validators also have to run nodes). Instead, Ethereum will essentially split its network of validators into different partitions (or committees) called “shards.”

Let’s say Ethereum has 1000 validators that currently store the same transaction data. If you split them into 4 groups of 250 validators each that will now store different data, you have suddenly quadrupled the amount of space available for rollups to dump data to.

This introduces a new problem. If validators within a shard will only download and store the transaction data that is dumped to their shard, validators within one shard won’t have guarantees that the entirety of the data dumped by a sequencer was indeed made available. They would only have guarantee for their shards, but not that the rest of the data was made available to other shards.

For that reason, we run into a situation where validators in one shard cannot be sure that the sequencer was not misbehaving because they do not know what is happening in other shards. Luckily, this is where the concept of DAS comes in. If you are a validator in one shard, you can sample for data availability using data availability proofs in every other shard! This will essentially give you the same guarantees as if you were a validator for every shard, and thereby allowing Ethereum to safely pursue data sharding.

With executable shards (the old vision of 64 EVM shards) on the other hand, secure cross-shard interoperability was a key issue. While there are schemes for asynchronous communication, there’s never been a convincing proposal for true composability. This is different in a scenario where Ethereum L1 acts as a data availability layer for rollups. A single rollup can now remain composable while leveraging data from multiple shards. Data shards can continue to expand, enabling faster and more rollups along with it. With innovative solutions like data availability sampling, extremely robust security is possible with data across up to a thousand shards.

Now that we understand the concept of data sharding, let’s have a look at what the specific danksharding implementation looks like. To make the data sharding approach outlined in the previous section feasible on Ethereum, there are some important concepts we should be aware of.

In simplified terms, danksharding is the combination of PBS (proposer-builder separation) and inclusion lists. The inclusion list component is a censorship resistance mechanism to prevent builders from abusing their magical powers and force non-censorship of user transactions. PBS splits the tasks of bundling of transactions (building blocks) and gossiping the bundle to the network (broadcasting). There can be many builders, of course, but if all builders choose to censor certain transactions there might still be censorship risks within the system. With crList, block proposers can force builders to include transactions. So, PBS allows builders to compete for providing the best block of transactions to the next proposer and stakers to capture as much of the economic value of their block space as possible, trustlessly and in protocol (currently this happens out of protocol).

On a high level, proposers collect transactions from the mempool and create a “crList”, which is essentially a list that contains the transaction information to be included in the block. The proposer conveys the crList to a builder who reorders the transactions in the crList as they wish to maximize MEV. In this way, although block proposers have no say when it comes to how transactions are ordered, proposers can still make sure all transactions coming from mempool enter the block in a censorship-resistant manner, by forcing builders to include them. In conclusion, the proposer-builder separation essentially builds up a firewall and a market between proposers and builders.
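The interaction between proposer and builder can be sketched as a simple inclusion check. The types and function names are hypothetical, ordering by fee is only a stand-in for MEV-maximizing ordering, and actual crList proposals add further conditions (for example around full blocks) that are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    fee: int


def build_block(cr_list: list[Tx], builder_txs: list[Tx]) -> list[Tx]:
    """Builder merges its own transactions with the crList and orders them freely."""
    merged = list(dict.fromkeys(list(cr_list) + list(builder_txs)))  # de-duplicate, keep order
    return sorted(merged, key=lambda tx: tx.fee, reverse=True)


def missing_from_block(block: list[Tx], cr_list: list[Tx]) -> list[Tx]:
    """Transactions the proposer demanded that the builder left out."""
    included = set(block)
    return [tx for tx in cr_list if tx not in included]


def proposer_accepts(block: list[Tx], cr_list: list[Tx]) -> bool:
    """The proposer only accepts blocks that contain every crList transaction."""
    return not missing_from_block(block, cr_list)


cr_list = [Tx("a", 1), Tx("b", 5)]
block = build_block(cr_list, builder_txs=[Tx("c", 9)])
censoring_block = [tx for tx in block if tx.tx_id != "a"]

print([tx.tx_id for tx in block], proposer_accepts(block, cr_list))
print([tx.tx_id for tx in censoring_block], proposer_accepts(censoring_block, cr_list))
```

The builder keeps full control over ordering, while the proposer retains a veto over blocks that drop transactions from its list.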

Additionally, in Danksharding’s latest design, the beacon blockchain will contain all the data from shards. This is achieved by having both Beacon blockchain and sharding data validated by a “committee” composed of validators (the shards). In this way, transactions from the same beacon blockchain can freely access shard data, and can be synchronized between rollups and the Ethereum base layer, greatly improving data availability. This greatly simplifies rollup structure as problems such as confirmation delay will no longer exist and opens up new possibilities of cross-rollup composability (e.g. synchronous calls of various L2s with an L2 powered but L1-based AMM contract).

Alternative DA Solutions

So the good news is that Ethereum is working on upgrades that will mitigate the DA problem on Ethereum (at least to some degree). The bad news though is that it will still take some time until EIP-4844 and especially full danksharding are implemented on Ethereum L1. And even once these solutions are implemented, DA on Ethereum might still be too limited or expensive for some highly cost-sensitive use cases. Consequently, alternative DA solutions have emerged. While covering those in depth would go beyond the scope of this report, we will briefly provide an overview here:

Data Availability Committee (DAC): DACs are committees consisting of multiple nodes run by trusted entities that guarantee to store data off-chain and ensure data availability for rollups. Nodes in DACs attest on-chain that the data of an L2 is available. DACs are utilized by projects like DeversiFi and ImmutableX, with a relatively low operation cost. However, the low cost comes at a security trade-off inherent to the centralized design (if the DAC goes offline, the rollup won’t have access to transaction data anymore).

Celestia: Celestia operates as a modular Data Availability (DA) layer separate from Ethereum (its own PoS L1 built on Cosmos SDK), allowing for data availability attestations with more economic guarantees and less trust assumptions compared to DACs. It employs a mechanism known as Data Availability Sampling (DAS) to enable scalability & end-user verification. As it operates independently, it is a more neutral solution for DA compared to DACs.

Avail: Similar to Celestia, Avail is also a modular DA layer that functions as its own L1. It’s built on Substrate (Polkadot framework) and uses KZG commitments instead of fault proofs to ensure security for data availability sampling light nodes.

Eigenlayer’s EigenDA: Developed by EigenLabs, EigenDA is a secure, high throughput, and decentralized Data Availability (DA) service built atop Ethereum using the EigenLayer restaking primitive. It's aimed at reducing gas fees on Layer 2s (L2s) and enhancing data availability bandwidth, thereby reducing data storage costs for Layer 2 Ethereum rollups. EigenDA is part of the broader EigenLayer ecosystem, which aims to facilitate securing middleware services like oracles, bridges or in this case a hyperscale Data Availability (DA) layer.

Decentralization of Sequencer

Another issue rollups are currently facing is decentralizing certain components in the system. The primary issue in this regard is the sequencer, which undoubtedly plays an essential role within any rollup system.

Current State of Rollup Sequencing

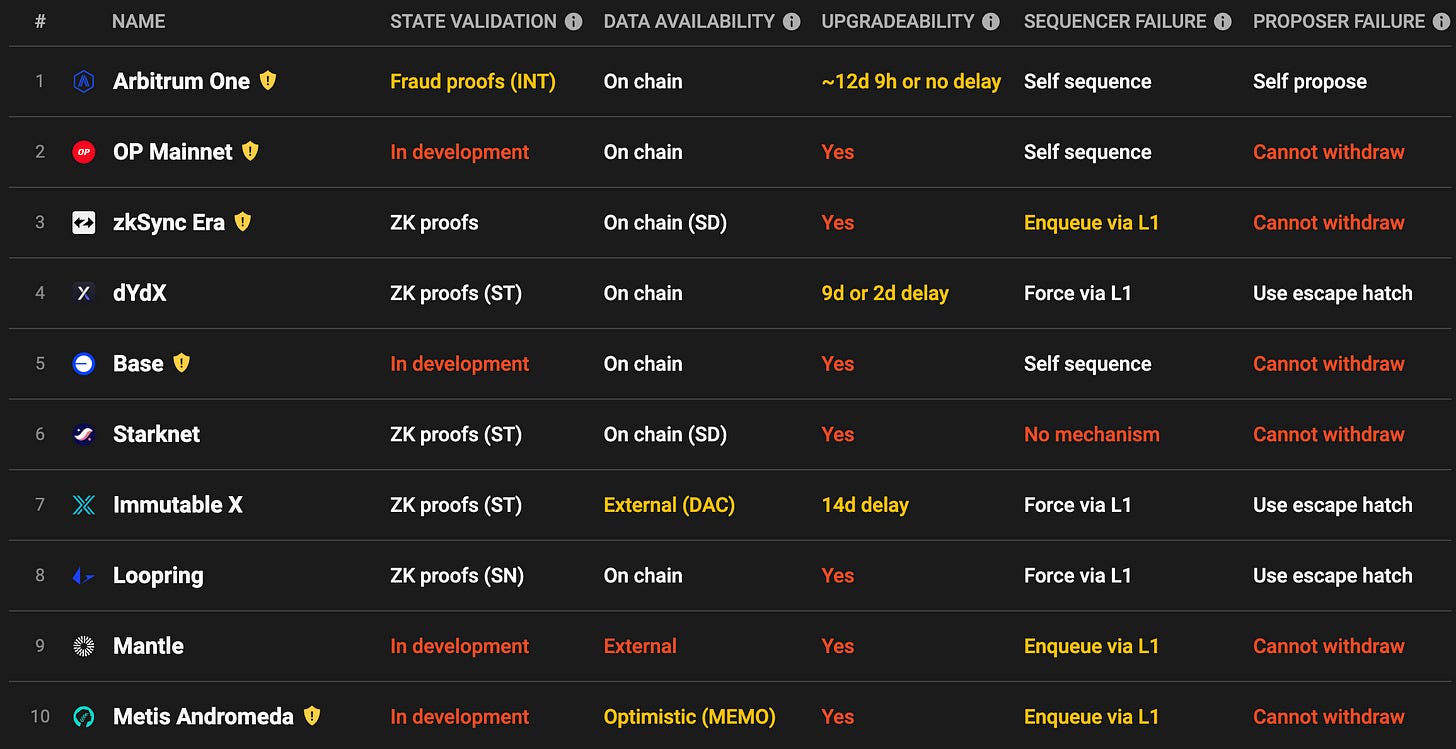

We already know what the role and responsibility of sequencers is within rollups. So let's look at the current sequencer design landscape and the tradeoffs these designs come with. L2Beat offers an overview of the status of sequencers of the most prominent L2s out there. As is evident from the screenshot below, there is still some work to be done here. Luckily, a lot of development is happening on this as you are reading this report.

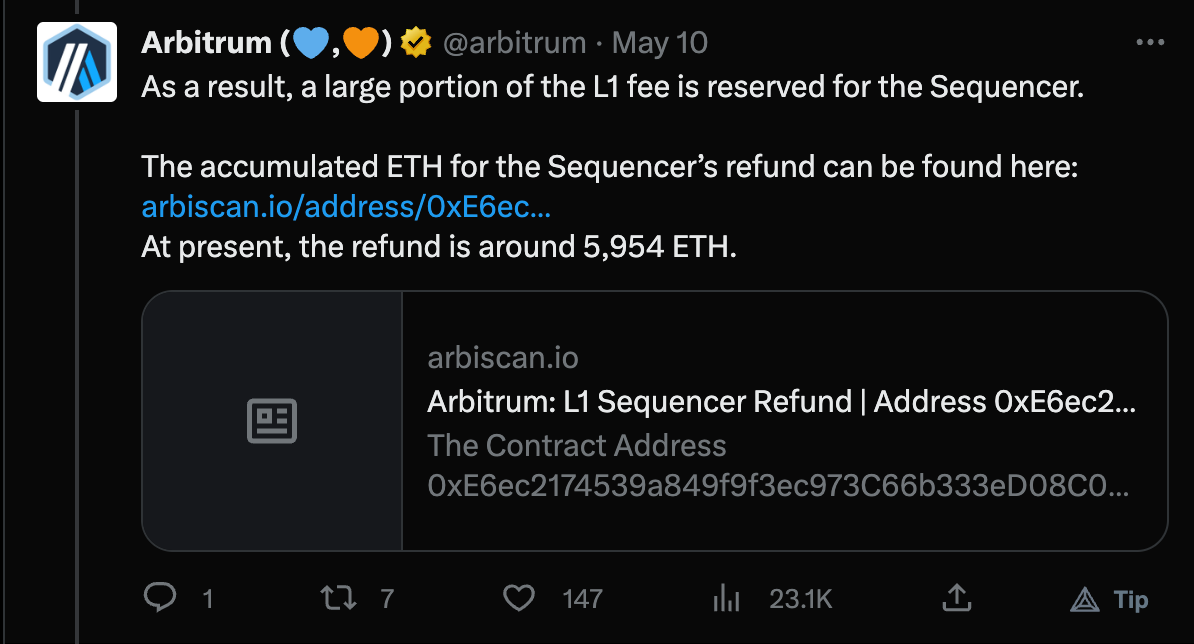

Currently, all major rollups rely on a single centralized operator, often managed by the team responsible for the respective rollup. While that makes sense in an initial stage (as it is very easy to set up & maintain), most rollups aim to decentralize the sequencer in the future. However, rollups like Arbitrum can hardly be blamed for continuously stating that they are going to decentralize their sequencer “soon”, considering the revenue it generates. Already in May 2023, there was over 5900 ETH in fee revenue reserved for the sequencer according to Arbiscan.

From a UX perspective it doesn’t really matter if the sequencer is centralized or not (centralized sequencers actually even tend to have a faster pre-confirmation time), if we assume that the sequencer is operating as intended. But having a single centralized sequencer does introduce additional trust assumptions. The issues that come with a centralized sequencer design are:

Single Point of Failure: No viable alternative if the sequencer is offline due to a variety of potential issues.

Monopolistic behavior: To maximize the profit the sequencer could artificially bloat the price that needs to be paid to get transactions included in the block.

Weak censorship resistance: There usually is not a mechanism to circumvent order exclusion or other dubious behavior.

No atomic composability: These ‘siloed’ setups need a cross-sequencer bridge to communicate with other rollups and won’t be atomically composable in any way.

But luckily, there are mechanisms that can help mitigate the risks caused by the centralized sequencer. Even though suboptimal, an escape hatch (like Arbitrum has implemented) could prevent transactions from being excluded in a block.

Current State of Sequencing

As rollups scale and grow, one risk attracting significant attention is the potential failure of sequencers and validators. Arbitrum for example operates a centralized sequencer, run by the Foundation, with 13 whitelisted validators exclusively running the state validation mechanism.

Summary of the current sequencer landscape. (Source: Twitter)

Like Arbitrum, Optimism operates a centralized sequencer that is run by the Optimism Foundation. If the sequencer goes offline, users can still force transactions to the L2 network via Ethereum L1 though, providing users with a sort of safety net. Optimism plans to decentralize the sequencer in the future using a crypto-economic incentive model and governance mechanisms, but the exact architecture is not agreed upon as of now (more on this later). In fact, a while ago the Optimism Foundation put out an RFP to enhance its sequencer design.

zkSync Era also has a centralized operator, serving as the network’s sequencer. Given the project's early stage, it even lacks a contingency plan for operator failure. Future plans include decentralizing the operators by establishing roles of validators & guardians though.

Finally, Polygon’s zkEVM features a centralized sequencer operated by the foundation as well. If the sequencer fails, user funds are frozen which means that users can’t access them until the sequencer is back online. However, Polygon plans to decentralize sequencing in the context of the Polygon 2.0 roadmap.

Approaches to Sequencer Decentralization

As a detailed analysis of decentralized sequencing models would go beyond the scope of this article, we won’t cover the different approaches in-depth. But it’s important to note that a decentralized sequencer is very important to address the aforementioned issues that come with a centralized sequencer design.

While we will briefly touch upon Optimism’s plans to decentralize the sequencer later on in this report, we will just focus on a short overview of other siloed (not shared) but decentralized sequencing proposals that are currently being discussed in other ecosystems:

A while ago Aztec released an RFP in which they asked the public to come up with decentralized sequencer designs. In total, 8 designs were deemed interesting, which can be found in this post, but only 2 have been selected for further research: Fernet and B52. Metis, another L2 working on its own sequencer, came up with a design they dubbed ‘decentralized sequencer pool’, which is part of their goal to become a fully decentralized and functional rollup.

Decentralization of State Validation Mechanism

To ensure security in the context of state validation, it’s important that proof generation (in the case of validity rollups) or fault proving mechanisms (in the context of optimistic rollups) are permissionless and decentralized. Let’s have a look at this in more detail:

Decentralized Provers in Validity Rollups:

Integrity Assurance: In Validity rollups, provers are responsible for generating proofs of validity for batches of transactions. The decentralization of provers ensures that no single entity or a small group of entities can control the validation process.

Resilience and Redundancy: Decentralization among provers also creates a system of redundancy. If a prover were to go offline or act maliciously, others in the network can continue to generate proofs and ensure the rollup can still validate transactions. This improves liveness guarantees and ensures uninterrupted service.

Competitive Verification: A decentralized network of provers promotes competition in generating validity proofs. This competition can lead to faster validation and better security as provers vie to provide accurate and timely validations to maintain their reputation and earn incentives.

Trust Minimization: The nature of decentralization minimizes trust assumptions. Users don't need to trust a single centralized entity. Instead, the mathematically-verifiable proofs generated by a decentralized network of provers provide a trustless validation system.

Permissionless Fraud Proving in Optimistic Rollups:

Open Participation: Optimistic rollups operate on the principle of optimistic execution, where transactions are assumed to be valid unless proven otherwise. The permissionless nature allows anyone to challenge potentially fraudulent transactions. This open participation is crucial for maintaining network integrity and trust (if you can’t verify that the sequencer isn’t posting invalid state roots, you have to trust someone else to do so).

Incentivized Honesty: Fault proofs not only allow for the detection and correction of fraudulent activities but also incentivize honesty. Malicious actors are penalized if a fault proof succeeds, and this penalty serves as a deterrent for dishonest behavior.

Enhanced Security: Permissionless and decentralized fault proving creates a self-regulating ecosystem where malicious actions are likely to be caught and corrected. This collective vigilance enhances the security and robustness of the network.

Community-Driven Regulation: Permissionless fault proving empowers the community to act as a regulatory force. This decentralized approach to regulation promotes transparency, fairness, and a sense of collective ownership over the network's security and integrity.
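The challenge flow described above can be sketched as a toy model. This is a minimal illustration, not Optimism's contracts: the `StateProposal` class, the seven-day window and the bond mechanics are all assumptions chosen for the example.

```python
from dataclasses import dataclass

CHALLENGE_WINDOW = 7 * 24 * 3600  # seconds; illustrative value (assumption)


@dataclass
class StateProposal:
    proposer: str
    claimed_root: str
    posted_at: int             # timestamp in seconds
    bond: int                  # stake the proposer forfeits if proven wrong
    invalidated: bool = False


def challenge(proposal: StateProposal, recomputed_root: str, now: int) -> int:
    """Permissionless challenge: there is no allow-list, any caller may invoke this.

    Returns the reward paid to the challenger (0 if the challenge fails).
    """
    if now > proposal.posted_at + CHALLENGE_WINDOW:
        raise ValueError("challenge window has closed")
    if proposal.invalidated or recomputed_root == proposal.claimed_root:
        return 0  # already rejected, or the proposer's claim was correct
    proposal.invalidated = True
    return proposal.bond  # the dishonest proposer's bond goes to the challenger


def is_final(proposal: StateProposal, now: int) -> bool:
    """A proposal is final once the window passes without a successful challenge."""
    return not proposal.invalidated and now > proposal.posted_at + CHALLENGE_WINDOW
```

The forfeited bond is what turns open participation into an economic deterrent: a wrong state root costs the proposer its stake, while an honest one simply becomes final after the window.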

Both decentralized provers in Validity Rollups and permissionless fault proving in Optimistic Rollups embody the ethos of blockchain technology, fostering a decentralized, secure, and open environment conducive to transparent interactions and scalable solutions.

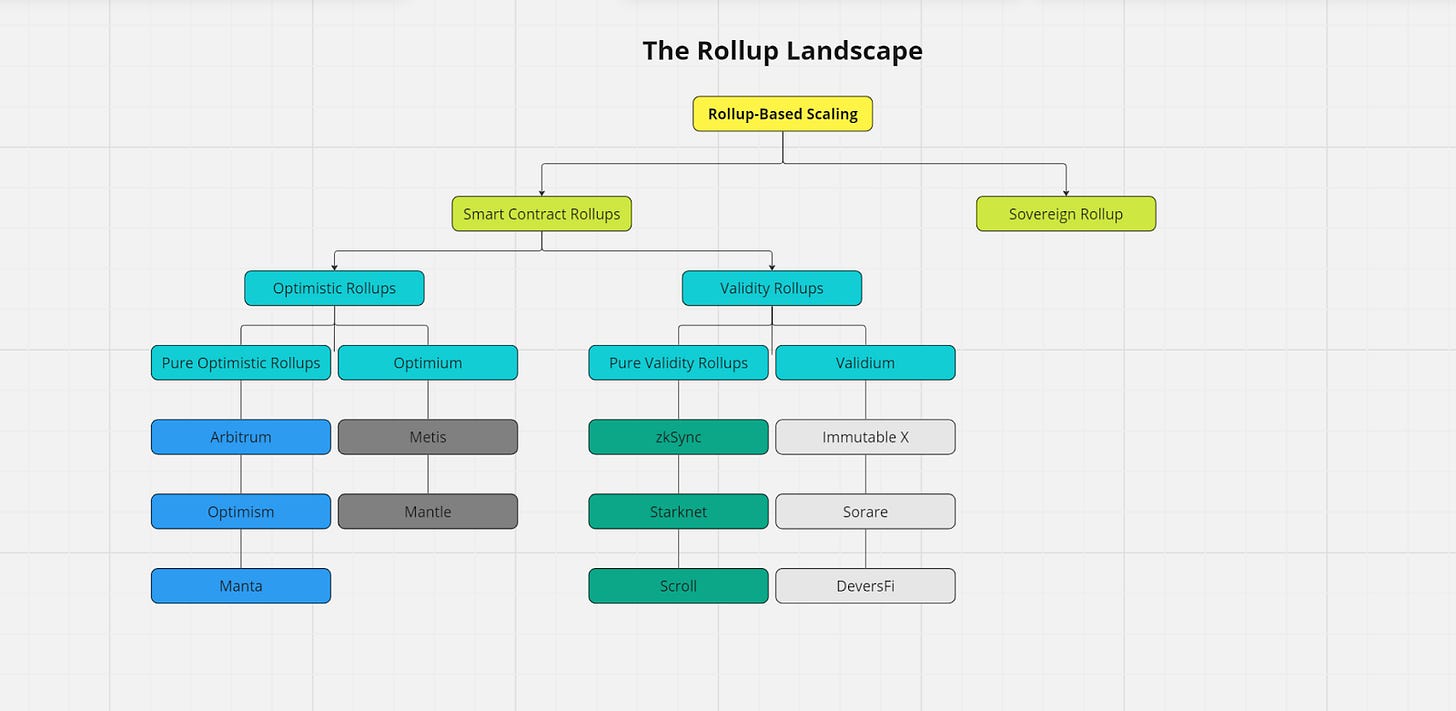

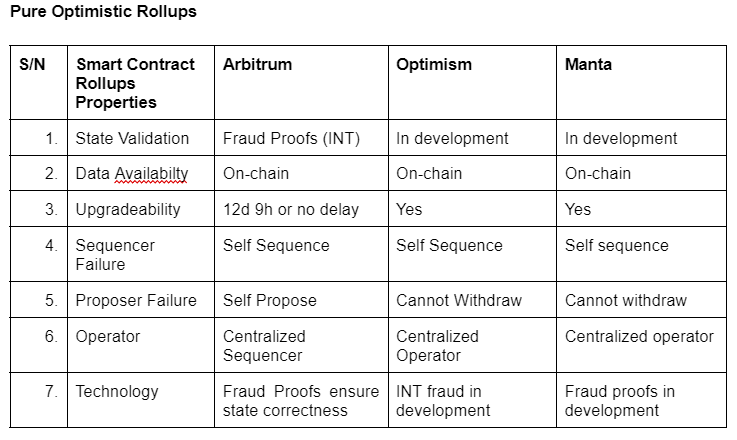

Rollup Landscape

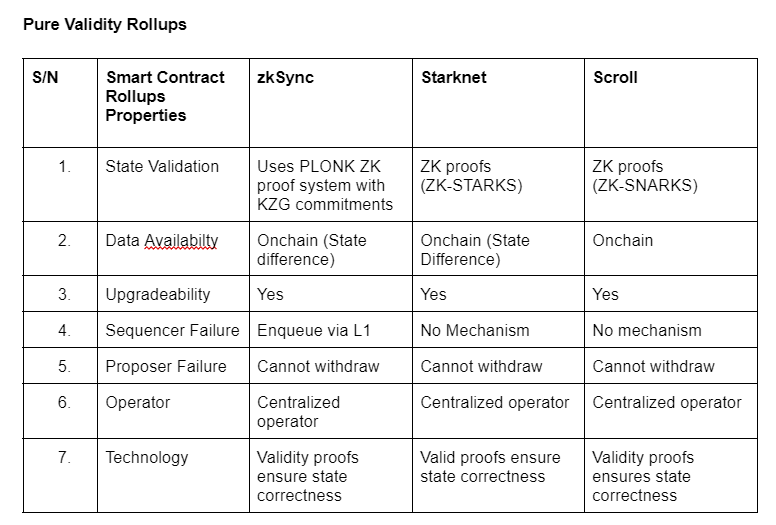

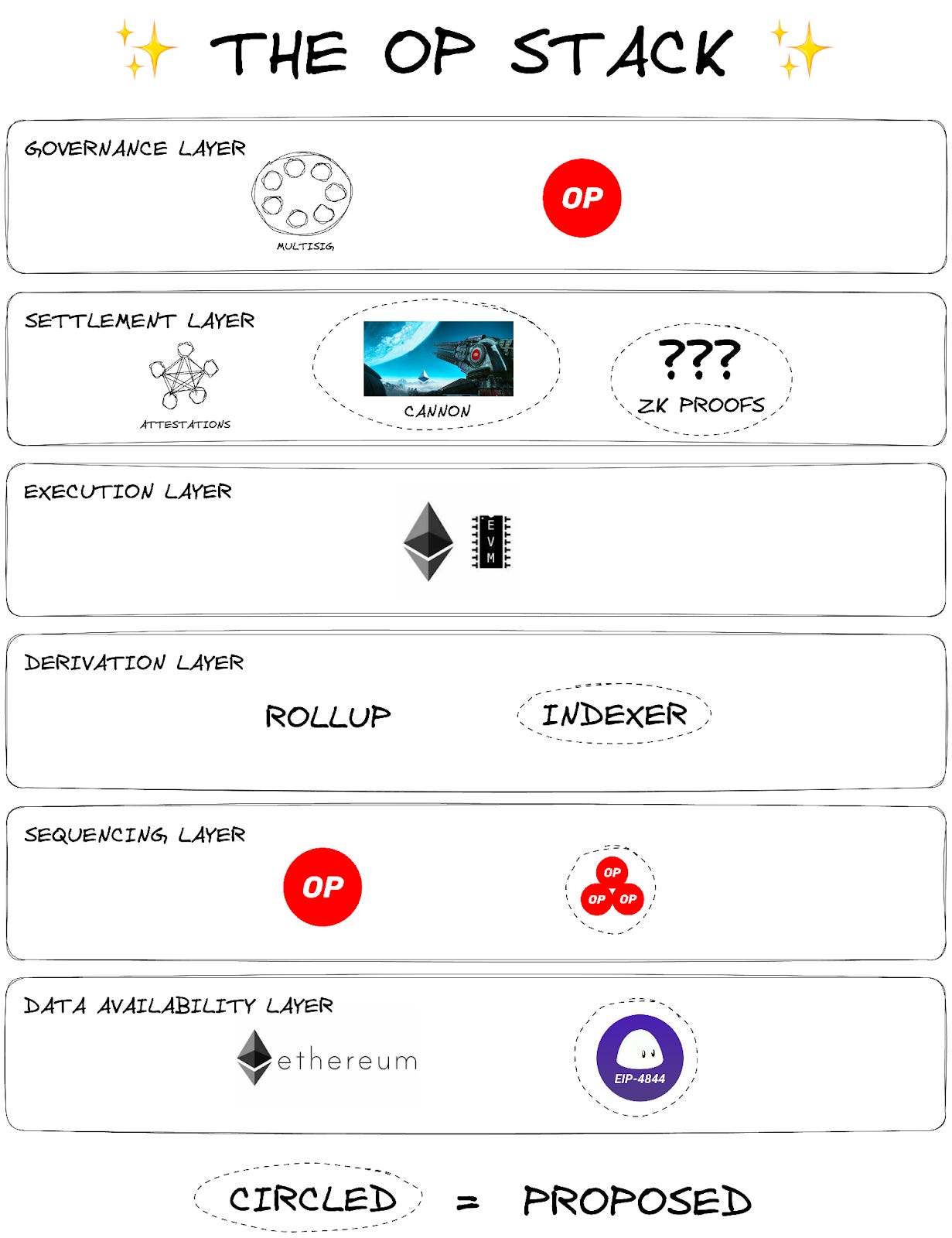

The current state of Smart Contract Rollups is subdivided into Optimistic Rollups and Validity Rollups (ZK Rollups).

Optimistic Rollups bundle and execute transactions off-chain and publish the transaction data on-chain to Mainnet, where consensus is reached. Validity Rollups also bundle transactions and run the computation off-chain, which reduces the amount of data that has to be published to Mainnet, and additionally submit a cryptographic proof (validity proof) to prove the correctness of their state changes.

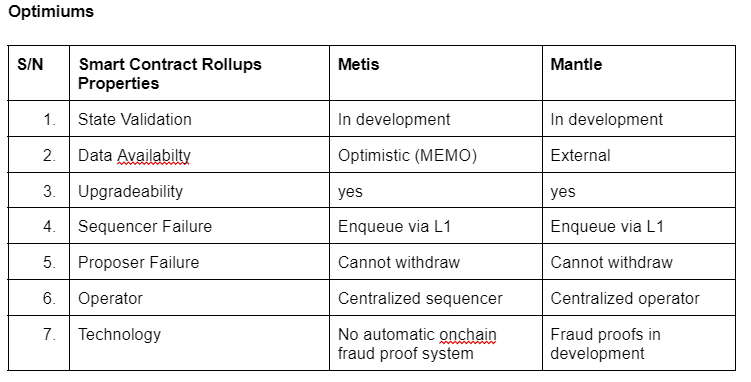

Optimistic Rollups can be further subdivided into pure Optimistic Rollups and Optimiums.

The difference between pure Optimistic Rollups and Optimiums lies in their Data Availability (DA) solution, as both use fraud proof systems: pure Optimistic Rollups publish data on-chain (to Ethereum), while Optimiums publish data off-chain.

Validity Rollups can be further subdivided into pure Validity Rollups and Validiums. The difference between pure Validity Rollups and Validiums is also the DA solution as pure Validity Rollups publish data on-chain, while Validiums publish data off-chain.
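The taxonomy above reduces to two independent choices, the proof system and where data is published. A minimal sketch (the function name and string labels are assumptions made for illustration):

```python
def classify_rollup(proof_system: str, data_on_ethereum: bool) -> str:
    """Map (proof system, DA location) to the category names used above."""
    if proof_system not in ("fraud", "validity"):
        raise ValueError(f"unknown proof system: {proof_system!r}")
    if proof_system == "fraud":
        return "Optimistic Rollup" if data_on_ethereum else "Optimium"
    return "Validity Rollup" if data_on_ethereum else "Validium"
```

For example, `classify_rollup("fraud", data_on_ethereum=False)` yields `"Optimium"`.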

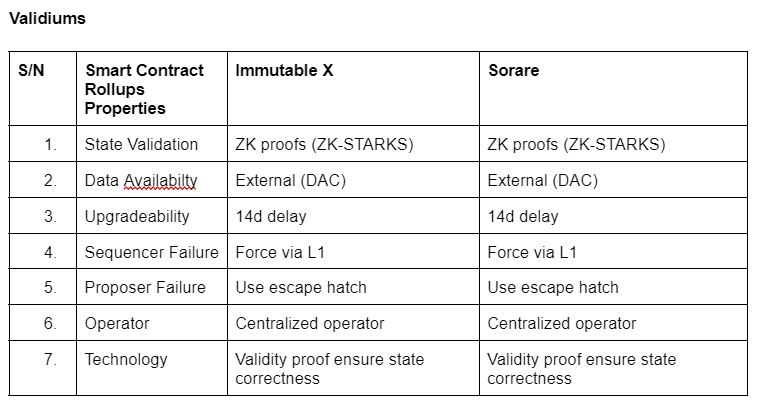

The OP Stack - Bedrock of the Ecosystem

Introduction to Optimism Bedrock

The OP Stack, as it stands, is the foundational software set that powers Optimism. It's the tech stack that the Optimism Mainnet is built on. However, its evolutionary trajectory is geared towards eventually supporting the Optimism Superchain and its associated governance. Oversight and continual refinement of the OP Stack are diligently carried out under the guidance of the Optimism Collective. The overarching vision of the OP Stack is to position it as a public good, bringing value to both the Ethereum and Optimism communities.

A crucial USP of the OP Stack is its versatility. While its contemporary design has been a big step in simplifying the process of creating L2 blockchains, it doesn't singularly define the OP Stack. Rather, the OP Stack encapsulates all the software components that exist in the Optimism universe. And, as Optimism evolves, so does the OP Stack.

The OP Stack is composed of software elements that either distinctly define a layer within the Optimism ecosystem or can be integrated as a module within these layers. At the center stands infrastructure that facilitates the operation of L2 blockchains. However, it goes beyond the foundational layers of a blockchain as higher layers might include tools such as block explorers, message passing mechanisms, governance systems, and more.

An intriguing architectural distinction of the OP Stack is exactly this layering. The foundational layers, for instance the Data Availability Layer, are very strictly defined. But as one moves higher up the stack, the layers like the Governance Layer tend to be more flexible and only loosely defined.

The current version and most recent iteration of the OP Stack is “Optimism Bedrock”. Bedrock equips builders with all the tools necessary to build out a production-ready optimistic rollup. The Bedrock upgrade significantly improved the OP Stack in various regards:

Reduced Transaction Costs: Transaction fees are lowered drastically through optimized data compression and the use of Ethereum for data availability. Additionally, the elimination of EVM execution-related gas costs during L1 data submission further lowered fees by roughly 10%.

Fast Deposit Times: Bedrock’s node software now supports L1 re-orgs, thereby drastically reducing deposit wait times from a previous maximum of 10 minutes to around 3 minutes.

Modular Proof Systems: A critical enhancement in Bedrock is its ability to detach the proof system from the main stack, allowing rollups to utilize either fault or validity proofs (such as zk-SNARKs) to verify execution validity.

Superior Node Performance: Node software performance has seen a significant boost. Nodes can now execute several transactions within a single rollup block, a departure from the previous "one transaction per block" protocol. In conjunction with improved data compression, this efficiency means state growth is cut down by an estimated 15GB annually.

Greater Ethereum Compatibility: Bedrock's design is closely aligned with Ethereum. This approach means several deviations present in the older versions have been rectified, including the one-transaction-per-block model mentioned above, unique opcodes for accessing L1 block data, separate fee structures for L1/L2 in the JSON-RPC API, and custom representations of ETH balances.

Additionally, Optimism Bedrock is an important step towards the realization of Optimism’s Superchain vision.

SystemConfig Contract: This new introduction aims to directly define the L2 chain with L1 smart contracts. The objective is to encapsulate all details defining the L2 chain, including the creation of unique chain IDs, setting block gas limits, etc.

Sequencer Flexibility: A distinctive feature in Bedrock is the capacity to designate sequencer addresses via the SystemConfig contract. This innovation introduces the concept of modular sequencing, allowing various chains with unique SystemConfig contracts to have their sequencer address determined by the deployer (more on this soon).

DA Flexibility: Bedrock also provides flexibility with regards to Data Availability (DA). This flexibility allows builders to opt for optimized DA solutions, significantly reducing cost compared to DA on Ethereum L1 (more on this soon).

Multi-Proof System Support: Bedrock will adopt Cannon as its primary fault-proof mechanism. Yet it remains flexible, leaving room for future integration with various optimistic as well as validity proof based systems. This multiplicity in proof systems ensures a broader spectrum of security and optimization options, enhancing the resilience and adaptability of the network.

Breaking down the OP Stack

Sequencing

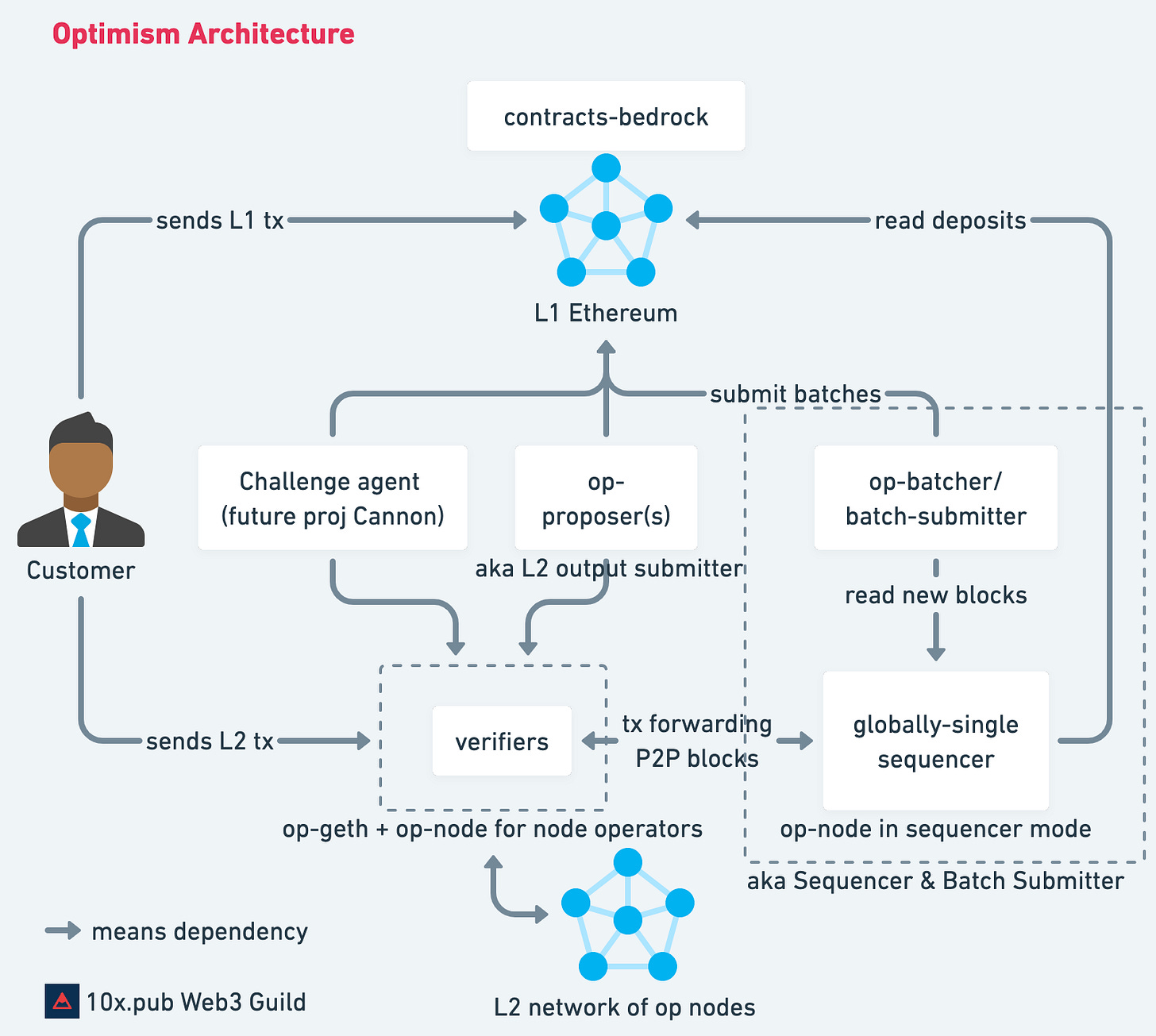

Sequencer Dynamics in Bedrock and OP Mainnet

The Sequencing Layer in the OP Stack determines how user transactions on an OP Stack chain are collected & published to the DA layer(s) in use. Central to Optimism block production is the role of the "sequencer." The sequencer provides crucial services for the network:

Providing transaction confirmations (soft confirmations) and (preliminary) state updates.

Constructing and executing L2 blocks.

Submitting user transactions to L1.

In the Bedrock iteration of the OP Stack, the sequencer maintains a mempool similar to that of L1 Ethereum. To ward off potential MEV exploitation, however, this mempool is kept private. On OP Mainnet, blocks are produced every two seconds, regardless of whether they are empty, filled to the block gas limit with transactions, or anything in between.
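Because blocks are produced on a fixed two-second cadence, block numbers and timestamps map onto each other with simple arithmetic. A minimal sketch, assuming the genesis block is numbered 0 and using a made-up genesis timestamp:

```python
L2_BLOCK_TIME = 2  # seconds, as stated above for OP Mainnet


def l2_block_number_at(timestamp: int, genesis_timestamp: int) -> int:
    """Number of the L2 block whose slot contains `timestamp` (genesis block = 0)."""
    if timestamp < genesis_timestamp:
        raise ValueError("timestamp precedes L2 genesis")
    return (timestamp - genesis_timestamp) // L2_BLOCK_TIME


def l2_block_timestamp(block_number: int, genesis_timestamp: int) -> int:
    """Timestamp of a given L2 block under the fixed block time."""
    return genesis_timestamp + block_number * L2_BLOCK_TIME


GENESIS = 1_000  # hypothetical genesis timestamp, for illustration only
blocks_per_hour = l2_block_number_at(GENESIS + 3600, GENESIS)  # 1800
```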

User transactions are channeled to the sequencer via two methods:

L1 Submissions: Transactions on L1, also known as deposits (even if they don't have assets attached), are included in the right L2 block. Each L2 block is uniquely identified by its "epoch" (the associated L1 block) and its serial number within that epoch. If the sequencer attempts to censor a valid L1 transaction, it results in a state different from what the verifiers compute. The fault proof mechanism that will consequently be initiated equips OP Mainnet with L1 Ethereum level censorship resistance (as seen earlier in this report).

Direct Submissions: Transactions can be dispatched directly to the sequencer. These bypass the expense of a separate L1 transaction but aren't censorship-resistant to the same degree, since the sequencer is the only entity that knows of them.
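The censorship check that verifiers perform on L1 submissions can be sketched as follows. This is a simplification under stated assumptions: the `L2Block` type and transaction identifiers are hypothetical, and a deposit counts as included if it appears in any L2 block of its epoch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L2Block:
    epoch: int             # number of the associated L1 block
    seq_number: int        # position of this block within the epoch
    txs: tuple[str, ...]   # transaction identifiers in this block


def missing_deposits(
    l1_deposits: dict[int, list[str]], l2_blocks: list[L2Block]
) -> dict[int, list[str]]:
    """Return, per epoch, the L1-submitted deposits that no L2 block of that epoch includes."""
    included: dict[int, set[str]] = {}
    for block in l2_blocks:
        included.setdefault(block.epoch, set()).update(block.txs)
    missing: dict[int, list[str]] = {}
    for epoch, deposits in l1_deposits.items():
        absent = [d for d in deposits if d not in included.get(epoch, set())]
        if absent:
            missing[epoch] = absent
    return missing
```

A non-empty result means the sequencer's chain diverges from what verifiers derive from L1. No such check is possible for direct submissions, because they are visible only to the sequencer.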

At present, the sole responsibility for sequencing (a.k.a. block production) on OP Mainnet rests with the Optimism Foundation. There is, however, a vision to eventually decentralize the sequencer role. We will explore this in more depth in the next section.

Progression towards a decentralized Sequencing Model

In the default rollup configuration of the OP Stack (which is what OP Mainnet is built on), sequencing is typically overseen by a single, centralized sequencer. The long-term vision, though, is for sequencing to be modular, allowing chains flexibility in selecting or altering their sequencer configuration. An evolutionary step from the single sequencer model will be to add a “multiple sequencer” module to the OP Stack. In this module, the sequencer is selected from a predefined pool of possible candidates. The precise mechanisms for this set's creation and the node selection can be tailored to the needs of the individual OP Stack based chains.

For now, however, the default sequencer module for the OP Stack (and hence OP Stack based chains) remains the single sequencer module, allowing a governance mechanism to decide the sequencer's identity. Through the SystemConfig contract, teams building on the OP Stack nevertheless have the option to choose an alternative sequencing solution such as a shared sequencer (e.g. Espresso, Astria or Radius).

The first decentralization step of the default sequencer module aims to implement sequencer rotation. The sequencer rotation mechanism, though not finalized yet, combines an economic mechanism, promoting competitive sequencing, and a governance mechanism safeguarding the network's long-term interests. Subsequent steps aim to support multiple concurrent sequencers, drawing inspiration from standard BFT consensus protocols adopted by other L1 protocols like Polygon and Cosmos.

Shared Sequencing in the Superchain Vision

In the Superchain vision, OP Stack based chains are conceptualized as app-specific shards of what feels to the user like a unified, logical chain. Thanks to a shared sequencer producing blocks across different OP chains, atomic interactions between these networks become feasible.

While this offers considerable potential, there are inherent challenges, particularly since the shared sequencer doesn't actually execute the proposed transactions. The lack of execution guarantees limits the degree to which atomic composability can be leveraged within the framework. Potential solutions like Shared Validity Sequencing (SVS) and SUAVE could offer pathways to address these concerns.

Shared Validity Sequencing (SVS): Shared Validity Sequencing as proposed by Umbra Research introduces an advanced block-building algorithm tailored for the shared sequencer to ensure atomicity and conditional execution guarantees. It also proposes the implementation of shared fault proofs among the participating rollups to enhance the integrity of cross-chain actions. While geared primarily towards optimistic rollups, SVS can also be applied to validity rollups (which are naturally asynchronously composable) to enable synchronous composability.

Flashbots SUAVE: A solution like SUAVE can help by providing economic atomicity (as essentially any builder can). However, only proposers (validators) have the power to guarantee transaction inclusion. SUAVE executors are not necessarily validators of other chains, and hence can’t guarantee atomic inclusion of cross-chain transactions. Shared sequencers, on the other hand, act as proposers of the rollups that opt into the sequencing solution. But since the shared sequencer doesn't execute transactions, it can’t guarantee that a transaction won’t revert upon execution. That’s why shared sequencers will need stateful builders such as SUAVE to sit in front of them. SUAVE anticipates that different cross-chain atomicity approaches will emerge and looks to support preference expression for all of them, which makes SUAVE a demand-side aggregator for cross-domain preferences.
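The gap between atomic inclusion and atomic execution can be made concrete with a toy model. Everything here is hypothetical (chain names, the transfer format, the balance maps): the shared sequencer places both legs of a cross-chain bundle into the respective blocks without executing them, and one leg still reverts once the rollup nodes run it.

```python
def sequence_atomically(bundle: dict, chain_blocks: dict) -> None:
    """Shared sequencer: append each leg to its chain's block. No execution happens here."""
    for chain, tx in bundle.items():
        chain_blocks[chain].append(tx)


def execute(tx: dict, balances: dict) -> bool:
    """Rollup node: apply a transfer, returning False if it reverts for lack of funds."""
    if balances.get(tx["sender"], 0) < tx["amount"]:
        return False
    balances[tx["sender"]] -= tx["amount"]
    balances[tx["to"]] = balances.get(tx["to"], 0) + tx["amount"]
    return True


blocks = {"chain_a": [], "chain_b": []}
bundle = {
    "chain_a": {"sender": "alice", "to": "bob", "amount": 5},
    "chain_b": {"sender": "bob", "to": "alice", "amount": 5},
}
sequence_atomically(bundle, blocks)  # both legs are included together

states = {"chain_a": {"alice": 10}, "chain_b": {"bob": 0}}  # bob is unfunded on chain_b
results = {chain: execute(blocks[chain][0], states[chain]) for chain in blocks}
# results == {"chain_a": True, "chain_b": False}: included atomically, executed non-atomically
```

A stateful builder in front of the sequencer would simulate both legs first and only submit bundles whose legs all succeed, which is the role the text assigns to SVS-style block building or SUAVE.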

In conclusion, sequencing within the OP Stack is continually evolving, transitioning from a centralized single sequencer model towards a shared, decentralized sequencer framework, mirroring the broader aspirations of the Optimism ecosystem in line with the Superchain vision.

Execution environment

Introduction to the Execution Layer in the OP Stack

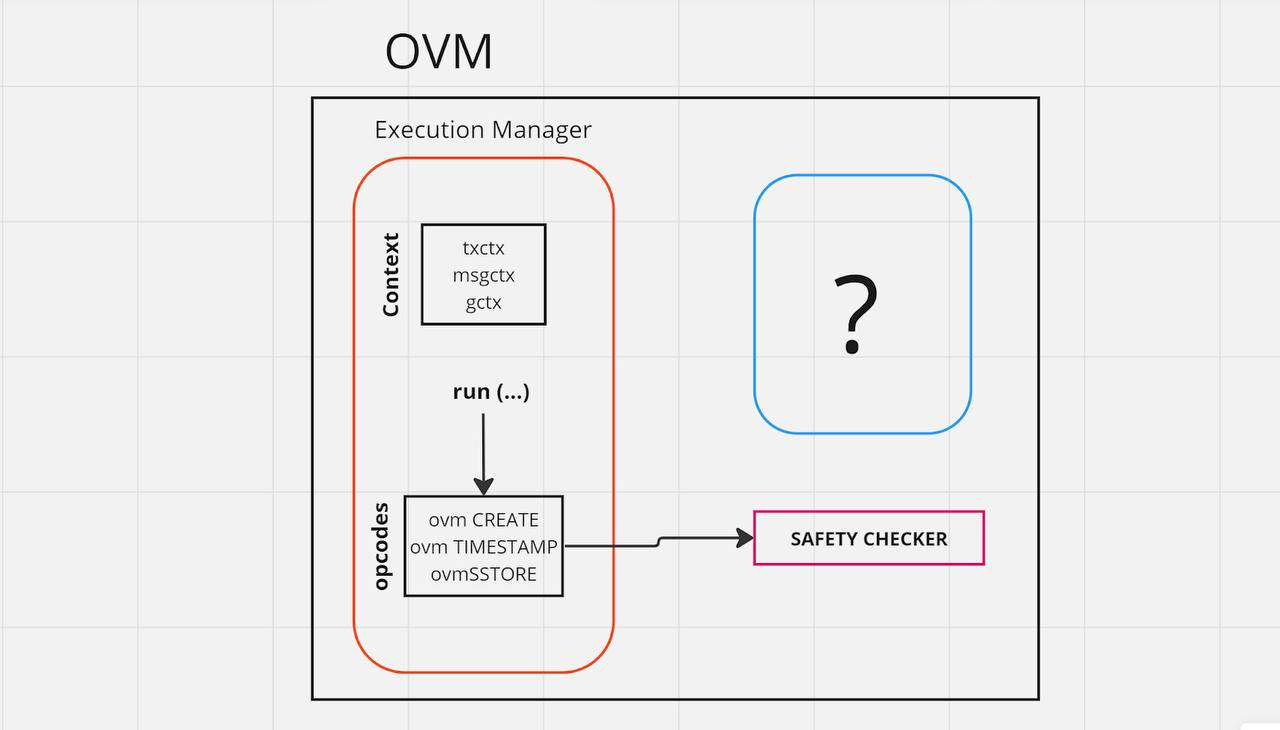

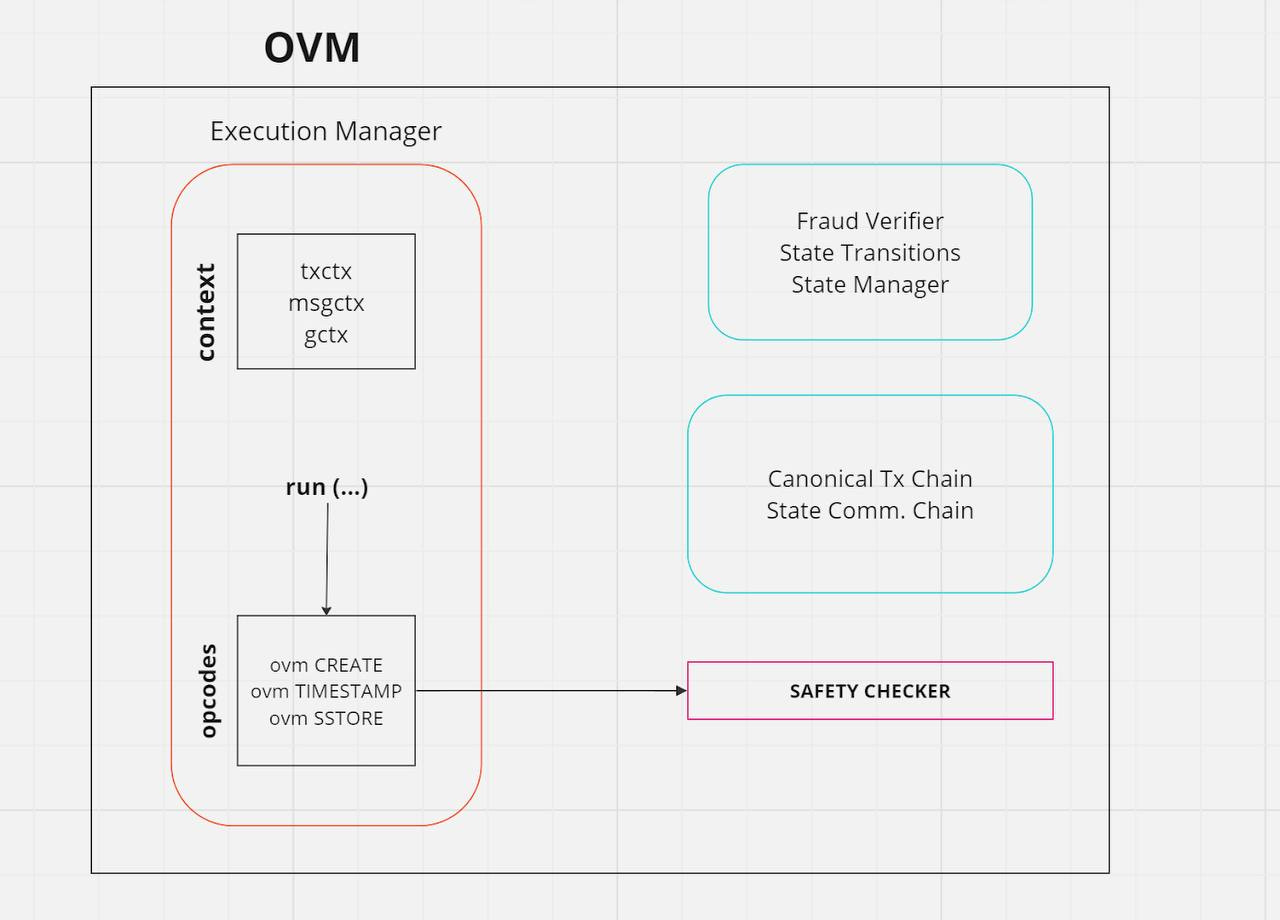

Central to the architecture of the OP Stack is the Optimistic Virtual Machine (OVM), a specialized execution layer module. Notably, it draws extensively from the Ethereum Virtual Machine (EVM) in both state representation and the state transition function, offering a harmonious integration between the two systems.

However, this is not a 1:1 copy of the EVM. Rather, it's a lightly modified version of the EVM that incorporates:

Support for L2 transactions initialized on Ethereum.

An additional L1 data fee to each transaction to offset the cost of transferring transactions to Ethereum.
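As a rough illustration of the L1 data fee, the sketch below assumes the Bedrock-era formula: the calldata gas of the serialized transaction plus a fixed overhead, multiplied by the L1 base fee and a dynamic overhead scalar. The 4 and 16 gas per byte figures are Ethereum's calldata costs; the overhead and scalar values in the usage note are placeholders, not live network parameters.

```python
ZERO_BYTE_GAS = 4       # L1 calldata gas per zero byte
NONZERO_BYTE_GAS = 16   # L1 calldata gas per non-zero byte


def l1_data_fee(
    tx_data: bytes, l1_base_fee: int, fixed_overhead: int, dynamic_overhead: float
) -> float:
    """Estimate the L1 data fee (in wei) for a serialized transaction."""
    zero_bytes = tx_data.count(0)
    nonzero_bytes = len(tx_data) - zero_bytes
    tx_data_gas = zero_bytes * ZERO_BYTE_GAS + nonzero_bytes * NONZERO_BYTE_GAS
    return l1_base_fee * (tx_data_gas + fixed_overhead) * dynamic_overhead
```

With placeholder inputs, `l1_data_fee(bytes([0, 0, 1, 2]), l1_base_fee=10, fixed_overhead=60, dynamic_overhead=0.5)` charges for 40 calldata gas plus 60 overhead gas and returns `500.0`. The total fee a user pays is this amount plus the ordinary L2 execution fee.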

The OVM doesn't rigidly enforce transaction validity. Instead, it adopts an optimistic approach, processing transactions with the assumption of their validity and leaning on the L1 chain for arbitration, by means of fault proofs, in cases of state transition discrepancies.

Transaction Dynamics within the OVM

Transaction processing within the OVM exhibits strong parallels with EVM transactions. A transaction can be initiated by an externally owned account (EOA) or a contract account. When initiated by EOAs, transactions can manifest as:

Asset transfers (Example: Alice transferring 5 ETH to Bob).

Contract deployments, evidenced when compiled bytecode is relayed as a data component of a transaction.

Smart contract interactions (e.g. Alice buying 5 UNI tokens).

Contracts can initiate transactions as well; these are called “message calls” and can be directed at an EOA or another contract. Furthermore, within the OVM's ecosystem, a contract can even deploy other contracts via a specialized contract-creation transaction.

Like its counterpart (the EVM), the OVM employs the concept of “gas,” a mechanism to meter the execution steps of each transaction and prevent potentially damaging infinite execution loops.
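How gas bounds execution can be shown with a toy interpreter. The instruction set and the flat cost of one gas per step are assumptions for illustration; real EVM opcodes have individual gas costs.

```python
class OutOfGas(Exception):
    """Raised when a program exhausts its gas allowance."""


def run(program: list, gas_limit: int, cost_per_step: int = 1) -> int:
    """Execute a toy program, charging gas per step; returns the gas left over.

    `program` is a list of tuples such as ("JUMP", target), ("NOOP",) or ("STOP",).
    """
    gas, pc = gas_limit, 0
    while pc < len(program):
        if gas < cost_per_step:
            raise OutOfGas(f"ran out of gas at instruction {pc}")
        gas -= cost_per_step
        op = program[pc]
        if op[0] == "STOP":
            break
        if op[0] == "JUMP":
            pc = op[1]   # jump to the target instruction
        else:
            pc += 1      # any other instruction just advances
    return gas
```

A program that jumps back to itself forever, such as `[("JUMP", 0)]`, does not hang the interpreter: it consumes its whole allowance and then fails with `OutOfGas`.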

Bytecode & EVM Equivalence

Bytecode serves as the foundational low-level instructions, indispensable for the OVM's functioning. Before deployment within the OVM, code written in high-level EVM-compatible programming languages (such as Solidity) is compiled into bytecode. This bytecode then becomes operational, executing a sequence of opcodes based on the respective transaction inputs. More specifically, Optimism uses a Solidity compiler that works by converting Solidity to Yul, then into EVM instructions, and finally into bytecode.

However, it's pivotal to distinguish between EVM compatibility and true EVM equivalence. While the OVM's architecture is EVM-compatible, it isn’t a perfect duplicate of the EVM. In the case of mere compatibility, developers often still need to adjust or re-engineer the fundamental code which Ethereum-based infrastructure relies on.
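To make the relationship between bytecode and opcodes concrete, here is a minimal disassembler sketch. It covers only three real EVM opcodes (STOP, ADD, PUSH1) and is an illustration, not part of any Optimism tooling.

```python
OPCODES = {0x00: "STOP", 0x01: "ADD", 0x60: "PUSH1"}  # small subset of the EVM opcode table


def disassemble(bytecode: bytes) -> list[str]:
    """Decode bytecode into readable instructions (only the opcodes listed above)."""
    out, pc = [], 0
    while pc < len(bytecode):
        name = OPCODES.get(bytecode[pc])
        if name is None:
            raise ValueError(f"unsupported opcode 0x{bytecode[pc]:02x}")
        if name == "PUSH1":
            # PUSH1 carries one immediate byte: the value pushed onto the stack
            out.append(f"PUSH1 0x{bytecode[pc + 1]:02x}")
            pc += 2
        else:
            out.append(name)
            pc += 1
    return out
```

For instance, `disassemble(bytes.fromhex("600260030100"))` returns `["PUSH1 0x02", "PUSH1 0x03", "ADD", "STOP"]`, a program that pushes 2 and 3, adds them and halts.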